D1.1 Technology survey: Prospective and challenges - Revised version (2018)

4 ICT based systems for monitoring, control and decision support

4.13 Machine-to-Machine Model for Water Resource Sharing in Smart Cities

Taking into consideration the possibilities offered by ICT and the critical problems in the water management field, a model of M2M device collaboration is proposed. Its main purpose is to optimize water resource sharing. The model is an M2M integration between RoboMQ (message broker) and Temboo (IoT software toolkit) that coordinates the distribution of the same available water resource when several requests are made at the same time. We use the following methods:

Use labeled queues to differentiate between messages (data values), thereby evaluating which request has the greater need before sending commands to the actuators;

Tune parameters to obtain a generic water-saving mode, which the user can enable after receiving several water-shortage alerts (extendable to large-scale use, e.g. city scale).

Targeted at / Use cases:

Regular end users, for better management of the water resources of households or small facilities (farms, rural houses, residences with their own water supply, zoos/botanical gardens);

Authorities, for better management of a single city's water resources in critical situations (prolonged water shortage, prolonged repairs to the water infrastructure, natural disasters).
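The labeled-queue method and the water-saving mode described above can be illustrated with a minimal Python sketch. It rests on several assumptions that do not come from the proposed model itself:

- each request carries a hypothetical need label (`critical`, `high`, `normal`, `low`);
- higher-need labels are served first, and requests with the same label are served in arrival order;
- the water-saving mode simply scales every requested amount by a hypothetical `saving_factor`.

```python
import heapq
from typing import List, Tuple

# Hypothetical need labels; a lower number means a greater need.
NEED_PRIORITY = {"critical": 0, "high": 1, "normal": 2, "low": 3}


def allocate(
    requests: List[Tuple[str, str, float]],
    available: float,
    saving_factor: float = 1.0,
) -> Tuple[List[Tuple[str, float]], float]:
    """Decide which actuator commands to send for concurrent water requests.

    requests: (consumer_id, need_label, litres_requested) tuples.
    saving_factor: < 1.0 models a water-saving mode that trims every request.
    Returns the (consumer_id, litres_granted) commands and the water left over.
    """
    # The arrival index breaks ties, so equal labels are served first come, first served.
    heap = [
        (NEED_PRIORITY[label], index, consumer, litres * saving_factor)
        for index, (consumer, label, litres) in enumerate(requests)
    ]
    heapq.heapify(heap)

    commands = []
    while heap and available > 0:
        _, _, consumer, wanted = heapq.heappop(heap)
        granted = min(wanted, available)
        available -= granted
        commands.append((consumer, granted))
    return commands, available


requests = [("garden", "low", 50), ("house", "critical", 30), ("farm", "high", 40)]
print(allocate(requests, 60))
print(allocate(requests, 60, saving_factor=0.5))
```

A binary heap is used so that the next highest-need request is found in logarithmic time even with many concurrent requests. In a deployed system, the labels would presumably be derived from the queue names or routing keys rather than from a Python dictionary.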

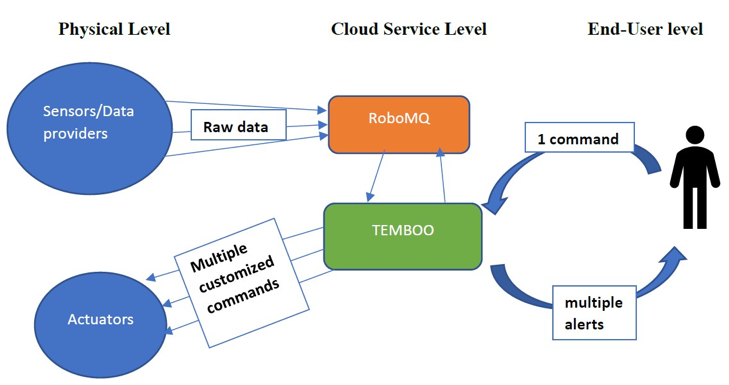

Figure 20. Machine-to-Machine Model for Water Resource Sharing in Smart Cities. Proposed architecture.

The architecture of the proposed model is presented in Fig. 20. It is structured on three levels: the Physical level, the Cloud Service level and the End-User level.

- **Physical level.** Sensors transmit raw data to a RoboMQ service, and actuators receive multiple customized commands from a Temboo service.
- **Cloud Service level.** Two systems operate here: RoboMQ, which receives data from the sensors, and Temboo, which sends commands to the Physical level.
- **End-User level.** This top level takes commands from users as input and receives multiple alerts from the Physical and Cloud Service levels.

In order to build a solution for the proposed M2M model, the elements needed in the integration have to be identified. The intention is to integrate two different entities: a system of sensors and actuators, and a mobile/desktop application that can both receive a notification/message alert and send back a response. The communication between the two systems (or, more precisely, between the system and the end user) can be done through a Message Oriented Middleware (MoM), while the action flows can be implemented as microservices (e.g. an email alert microservice).

RabbitMQ was chosen as the MoM for a performance analysis, in order to confirm whether this type of middleware is suitable for the proposed model.

RabbitMQ is message-queueing software, usually known as a message broker or queue manager. It allows users to define queues to which applications can connect and transfer messages, along with various other related parameters.

A message broker like RabbitMQ can act as a middleman for a series of services (e.g. for a web application, to reduce load and delivery times). Tasks that would normally take a long time to process can be delegated to a third party whose only job is to perform them. Message queueing allows web servers to respond to requests quickly instead of being forced to perform resource-heavy procedures on the spot. It is also a good option for distributing a message to multiple recipients, for consumption, or for balancing load between workers.
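The delegation idea can be illustrated in-process with Python's standard `queue` and `threading` modules. This is a minimal sketch of the work-queue pattern, not RabbitMQ code. The mapping is as follows:

- the `queue.Queue` stands in for the broker;
- the threads stand in for workers;
- squaring a number is a placeholder for a resource-heavy task.

```python
import queue
import threading
from typing import Iterable, List


def run_work_queue(jobs: Iterable[int], n_workers: int = 4) -> List[int]:
    """Distribute jobs over n_workers threads through a shared task queue."""
    tasks = queue.Queue()    # stands in for the broker's queue
    results = queue.Queue()  # thread-safe collection of outcomes

    def worker() -> None:
        while True:
            job = tasks.get()
            if job is None:         # sentinel: no more work for this worker
                break
            results.put(job * job)  # placeholder for a slow, resource-heavy task

    threads = [threading.Thread(target=worker) for _ in range(n_workers)]
    for t in threads:
        t.start()

    # The "front end" only enqueues work; it does not perform the tasks itself.
    for job in jobs:
        tasks.put(job)
    for _ in threads:
        tasks.put(None)  # one sentinel per worker, queued after all real jobs

    for t in threads:
        t.join()
    return sorted(results.get() for _ in range(results.qsize()))


print(run_work_queue(range(10), n_workers=3))
```

Each worker picks up the next job as soon as it is free, so load is balanced among workers without central coordination. RabbitMQ provides the analogous behaviour for several competing consumers on one queue.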

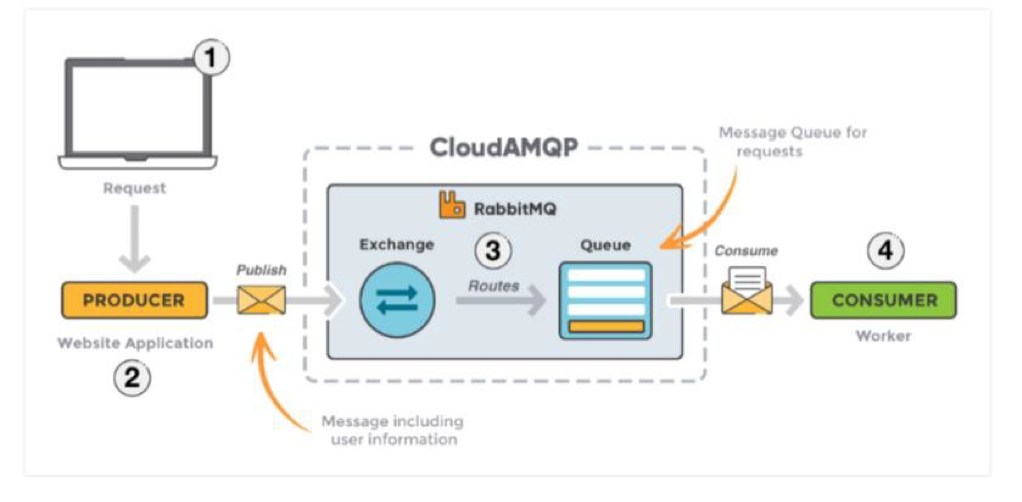

The basic architecture of a message queue is based on several elements:

- client applications called producers, which create messages and deliver them to the broker (the message queue);
- other applications called consumers, which connect to the queue and subscribe to the messages.

Messages placed in the queue are stored until a consumer retrieves them.

A message can include any kind of information. It could carry information about a process that should start in another application (e.g. a log message), or it could be just a simple text message. The receiving application processes the message appropriately after retrieving it from the queue. Messages are not published directly to a queue; instead, the producer sends messages to an exchange, which is responsible for routing them to different queues. The exchange routes messages to queues with the help of bindings (links) and routing keys [RabbitMQ Website documentation, 2018].
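To make the producer, exchange, binding and queue path concrete, here is a toy in-memory model of a *direct* exchange. In a direct exchange, a message is copied to every queue whose binding key equals the message's routing key.

This is an illustration only, with hypothetical queue names and routing keys. RabbitMQ also supports other exchange types (fanout, topic, headers). By default, RabbitMQ drops a message that matches no binding; the sketch mirrors this by returning 0.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, Set


class DirectExchange:
    """Toy direct exchange: routes a message to every queue bound with its exact routing key."""

    def __init__(self) -> None:
        self.bindings: Dict[str, Set[str]] = defaultdict(set)  # routing key -> queue names
        self.queues: Dict[str, Deque[str]] = {}                # queue name -> stored messages

    def declare_queue(self, name: str) -> None:
        self.queues.setdefault(name, deque())

    def bind(self, queue_name: str, routing_key: str) -> None:
        self.declare_queue(queue_name)
        self.bindings[routing_key].add(queue_name)

    def publish(self, routing_key: str, body: str) -> int:
        """Copy body into each matching queue; return how many queues received it."""
        targets = self.bindings.get(routing_key, set())
        for name in targets:
            self.queues[name].append(body)
        return len(targets)  # 0 means unroutable: the message is dropped


exchange = DirectExchange()
exchange.bind("alerts", "water.low")
exchange.bind("audit", "water.low")
exchange.bind("audit", "water.ok")
exchange.publish("water.low", "tank-1 below threshold")
exchange.publish("water.ok", "tank-2 refilled")
print({name: list(q) for name, q in exchange.queues.items()})
```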

The message flow in RabbitMQ contains the following elements (Fig. 21):

Producer: Application that sends the messages.

Consumer: Application that receives the messages.

Queue: Buffer that stores messages.

Message: Information that is sent from the producer to a consumer through RabbitMQ.

Connection: A connection is a TCP connection between your application and the RabbitMQ broker.

Channel: A channel is a virtual connection inside a connection. When you are publishing or consuming messages from a queue it's all done over a channel.

Exchange: Receives messages from producers and pushes them to queues depending on rules defined by the exchange type. In order to receive messages, a queue needs to be bound to at least one exchange.

Binding: A binding is a link between a queue and an exchange.

Routing key: The routing key is a key that the exchange looks at to decide how to route the message to queues. The routing key is like an address for the message.

AMQP: AMQP (Advanced Message Queuing Protocol) is the protocol used by RabbitMQ for messaging.

Users: It is possible to connect to RabbitMQ with a given username and password. Every user can be assigned permissions such as rights to read, write and configure privileges within the instance. Users can also be assigned permissions to specific virtual hosts.

Vhost, virtual host: A virtual host provides a way to segregate applications that use the same RabbitMQ instance. Different users can have different access privileges to different vhosts, and queues and exchanges can be created so that they exist in only one vhost [RabbitMQ Website documentation, 2018].

Figure 21. RabbitMQ architecture.
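For *topic* exchanges, the rule the exchange applies is wildcard matching on dot-separated words. In a binding key, `*` matches exactly one word and `#` matches zero or more words.

The Python sketch below implements that matching rule, using hypothetical routing keys such as `water.tank1.level`. Such keys could, for instance, route labeled sensor messages in the proposed model. The sketch reproduces only the documented wildcard semantics, not RabbitMQ's internal algorithm.

```python
from functools import lru_cache


def topic_matches(binding_key: str, routing_key: str) -> bool:
    """Return True if routing_key matches binding_key under topic-exchange rules.

    Keys are dot-separated words; in the binding key '*' matches exactly one
    word and '#' matches zero or more words.
    """
    pattern = binding_key.split(".")
    words = routing_key.split(".")

    @lru_cache(maxsize=None)
    def match(i: int, j: int) -> bool:
        if i == len(pattern):      # pattern used up: the words must be used up too
            return j == len(words)
        token = pattern[i]
        if token == "#":           # let '#' absorb 0, 1, 2, ... words
            return any(match(i + 1, k) for k in range(j, len(words) + 1))
        if j == len(words):        # words ran out but the pattern still needs one
            return False
        if token == "*" or token == words[j]:
            return match(i + 1, j + 1)
        return False

    return match(0, 0)


for key in ["water.tank1.level", "water.tank1.pressure", "water", "garden.tank2.level"]:
    print(key, topic_matches("water.*.level", key), topic_matches("water.#", key))
```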

Performance test on RabbitMQ. The aim of this test is to assess the performance of a RabbitMQ server under certain imposed conditions. To run the tests, a CloudAMQP instance hosting RabbitMQ is used. RabbitMQ provides a web UI for managing and monitoring the RabbitMQ server; this management interface is enabled by default in CloudAMQP.

Steps performed for setting up CloudAMQP:

1) Create a CloudAMQP instance (this generates the user, password and URL). A TCP connection will be set up between the application and RabbitMQ.

2) Download the client library for the intended programming language (Python): the Pika library.

3) Modify the Python scripts (producer.py, consumer.py) to:

open a channel to send and receive messages;

declare/create a queue;

in the consumer, set up exchanges, bind a queue to an exchange and consume messages from the queue;

in the producer, send messages to an exchange and close the channel. The scripts are attached in the Annex.
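A minimal sketch of what such producer/consumer scripts might look like with Pika 1.x is shown below. It is not the Annex code, and it rests on these assumptions:

- the exchange, queue and routing-key names are hypothetical;
- the broker URL is assumed to be in a `CLOUDAMQP_URL` environment variable;
- Pika is imported only inside the broker-facing functions, so the message-encoding helper can be used without Pika installed.

The script declares a durable direct exchange and queue, publishes persistent messages, and consumes with manual acknowledgements and `prefetch_count=1`.

```python
import json
import os
import sys

# Hypothetical names; the original producer.py/consumer.py are in the Annex.
EXCHANGE = "water.exchange"
QUEUE = "water.requests"
ROUTING_KEY = "water.request"


def encode_reading(sensor_id: str, litres: float) -> bytes:
    """Serialize one reading as a JSON message body."""
    return json.dumps({"sensor": sensor_id, "litres": litres}).encode("utf-8")


def open_channel(url: str):
    """Open the TCP connection and a channel, then declare and bind the topology."""
    import pika  # imported lazily so encode_reading() works without Pika installed

    connection = pika.BlockingConnection(pika.URLParameters(url))
    channel = connection.channel()
    channel.exchange_declare(exchange=EXCHANGE, exchange_type="direct", durable=True)
    channel.queue_declare(queue=QUEUE, durable=True)
    channel.queue_bind(exchange=EXCHANGE, queue=QUEUE, routing_key=ROUTING_KEY)
    return connection, channel


def produce(url: str, count: int) -> None:
    import pika

    connection, channel = open_channel(url)
    for i in range(count):
        channel.basic_publish(
            exchange=EXCHANGE,
            routing_key=ROUTING_KEY,
            body=encode_reading(f"sensor-{i % 3}", 10.0),
            properties=pika.BasicProperties(delivery_mode=2),  # persistent message
        )
    connection.close()  # closing the connection also closes its channels


def consume(url: str) -> None:
    connection, channel = open_channel(url)
    channel.basic_qos(prefetch_count=1)  # at most one unacknowledged message per consumer

    def on_message(ch, method, properties, body):
        print("received", json.loads(body))
        ch.basic_ack(delivery_tag=method.delivery_tag)  # manual acknowledgement

    channel.basic_consume(queue=QUEUE, on_message_callback=on_message)
    try:
        channel.start_consuming()
    finally:
        connection.close()


if __name__ == "__main__":
    broker_url = os.environ.get("CLOUDAMQP_URL")  # assumed variable name
    if broker_url:
        if sys.argv[1:] == ["consume"]:
            consume(broker_url)
        else:
            produce(broker_url, count=100)
```

Run one or more copies with the `consume` argument, then run the script without arguments to publish. Starting several consumers on the same queue reproduces the multi-consumer scenarios described below.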

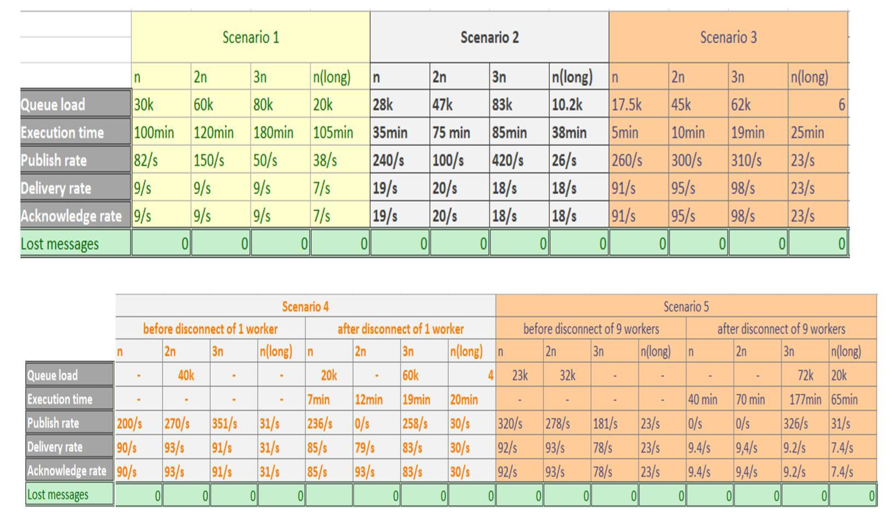

Parameters monitored: queue load, publish message rate, delivery message rate, acknowledge message rate, execution time, lost messages and memory usage, as can be seen in Fig. 22.

Figure 22. RabbitMQ test scenarios.

Observations based on results.

Effect of the number of consumers:

- With a single consumer, queue load increases roughly in proportion to the number of messages sent (n: 30k, 2n: 60k, 3n: 80k).
- Queue load is reduced by approximately 15% when the number of consumers increases from 1 to 10.
- Execution time is reduced by approximately 70% when the number of consumers is doubled.
- When short messages are sent, delivery rate increases proportionally with the number of consumers (1 consumer: 9/s, 2 consumers: 18/s, 10 consumers: 91/s).
- Queue load is directly influenced by the delivery rate.

Effect of message size:

- Publish rate is directly influenced by the size of the message being sent but is independent of the delivery rate.
- When long messages are sent to multiple consumers, publish rate and delivery rate have close values, hence queue load is very small. The time needed for a message to be published is almost the same as the time needed for it to be delivered and acknowledged.
- When long messages are sent to a single consumer, the same reasoning as in the short-message case applies. Queue load increases markedly (from 6 to 20k), and the delivery rate drops (from 30/s to 7/s), below the per-consumer rate observed with short messages.

Consumer failures, distribution and acknowledgements:

- When one or more consumers are killed during the send/receive process, their messages are redirected to the consumers that are still running. No messages are lost, except those already acknowledged by the consumer that was disconnected.
- Messages are not distributed exactly equally among multiple consumers, but the counts are very close (e.g. for 10 workers: 3030, 3005, 3014, 2966, 2998, 2995, 3023, 2978, 2988, 3002).
- When the send/receive process runs without acknowledgements, queue load is 0, as messages are sent continuously without waiting for a response from the consumer. This approach is risky, as the user has no information about possibly lost messages.
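The difference between the acknowledged and unacknowledged runs can be reproduced with a toy model of one queue. This is a simplified simulation written for illustration, not RabbitMQ's implementation; the consumer names `A` and `B` are hypothetical.

- With manual acknowledgements, messages delivered to a consumer that dies before acknowledging them are put back at the head of the queue.
- With automatic acknowledgement, the broker forgets a message as soon as it is delivered, so anything the dead consumer had not yet processed is silently lost.

```python
from collections import deque
from typing import Deque, Dict, Tuple


class ToyQueue:
    """Simplified model of one broker queue with optional manual acknowledgements."""

    def __init__(self, messages) -> None:
        self.ready: Deque = deque(messages)              # waiting to be delivered
        self.unacked: Dict[int, Tuple[str, object]] = {}  # delivery tag -> (consumer, message)
        self.next_tag = 1

    def deliver(self, consumer: str, auto_ack: bool):
        """Hand the next message to a consumer; return (tag, message) or None."""
        if not self.ready:
            return None
        message = self.ready.popleft()
        tag = self.next_tag
        self.next_tag += 1
        if not auto_ack:
            self.unacked[tag] = (consumer, message)  # kept until acknowledged
        return tag, message

    def ack(self, tag: int) -> None:
        del self.unacked[tag]

    def consumer_died(self, consumer: str) -> None:
        """Requeue, in original order, every unacknowledged message of the dead consumer."""
        tags = [tag for tag, (owner, _) in self.unacked.items() if owner == consumer]
        messages = [self.unacked.pop(tag)[1] for tag in tags]
        self.ready.extendleft(reversed(messages))


def run(auto_ack: bool) -> list:
    """Consumer A takes 3 messages and dies unprocessed; consumer B drains the rest."""
    q = ToyQueue(range(10))
    for _ in range(3):
        q.deliver("A", auto_ack)
    q.consumer_died("A")
    processed = []
    while (delivery := q.deliver("B", auto_ack)) is not None:
        tag, message = delivery
        processed.append(message)
        if not auto_ack:
            q.ack(tag)
    return processed


print("manual ack:", run(auto_ack=False))
print("auto ack:  ", run(auto_ack=True))
```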

Valuable features: RabbitMQ offers an efficient message-queuing solution that is easy to configure and to integrate into more complex systems/workflows. It successfully handled stress loads of more than 10k calls, and it decouples the front end from the back end.

Room for improvement: The most common disadvantage is related to troubleshooting, as users have no access to the actual message-routing process. A graphical interface or access to internal parameters would be useful when dealing with large clusters.

Microservices. The term "Microservice Architecture" describes a particular way of designing software applications as suites of independently deployable services. This architectural style is an approach to developing a single application as a suite of small services. Each service runs in its own process and communicates through lightweight mechanisms, most commonly an HTTP resource API; REST (Representational State Transfer) proves to be a useful integration method, as its complexity is lower than that of other protocols [Huston, 2018]. These services are built around business capabilities and can be deployed independently by fully automated deployment machinery [Fowler, 2018].

Basically, each microservice is self-contained and includes a whole application flow (database, data access layer, business logic), while the user interface is shared.
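As an illustration of a self-contained service exposing a REST resource over HTTP, here is a minimal sketch of a hypothetical alert microservice. Email sending itself is omitted. The service is written as a WSGI application using only the Python standard library, and it:

- owns its own in-memory store, standing in for the service's database;
- exposes `GET` and `POST` on a hypothetical `/alerts` resource;
- includes a small in-process `call` helper for exercising it without a network.

```python
import io
import json
from typing import Any, Dict, List, Tuple

ALERTS: List[Dict[str, Any]] = []  # hypothetical in-memory store owned by this service only


def _respond(start_response, status: str, payload: Any) -> List[bytes]:
    """Serialize payload as JSON and start the WSGI response."""
    data = json.dumps(payload).encode("utf-8")
    start_response(status, [("Content-Type", "application/json"),
                            ("Content-Length", str(len(data)))])
    return [data]


def alert_service(environ, start_response) -> List[bytes]:
    """WSGI application exposing GET/POST on the /alerts resource."""
    if environ.get("PATH_INFO", "") != "/alerts":
        return _respond(start_response, "404 Not Found", {"error": "not found"})
    method = environ["REQUEST_METHOD"]
    if method == "GET":
        return _respond(start_response, "200 OK", ALERTS)
    if method == "POST":
        length = int(environ.get("CONTENT_LENGTH") or 0)
        payload = json.loads(environ["wsgi.input"].read(length) or b"{}")
        alert = {"id": len(ALERTS) + 1, "message": payload.get("message", "")}
        ALERTS.append(alert)
        return _respond(start_response, "201 Created", alert)
    return _respond(start_response, "405 Method Not Allowed", {"error": "method not allowed"})


def call(method: str, path: str, payload: Any = None) -> Tuple[str, Any]:
    """Invoke the service in-process (no network) and return (status, decoded JSON)."""
    body = json.dumps(payload).encode("utf-8") if payload is not None else b""
    environ = {
        "REQUEST_METHOD": method,
        "PATH_INFO": path,
        "CONTENT_LENGTH": str(len(body)),
        "wsgi.input": io.BytesIO(body),
    }
    captured: Dict[str, str] = {}

    def start_response(status, headers):
        captured["status"] = status

    chunks = alert_service(environ, start_response)
    return captured["status"], json.loads(b"".join(chunks))


print(call("POST", "/alerts", {"message": "low water level in tank-1"}))
print(call("GET", "/alerts"))
```

To serve it over HTTP for a quick test, `wsgiref.simple_server.make_server("", 8000, alert_service).serve_forever()` can be used. Because the service shares nothing with other services except its HTTP interface, it can be redeployed or replicated on its own.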

One of the most important advantages of microservices is that they are designed to cope with failure. They are an evolutionary model that can accommodate service interruptions: if one microservice fails, the others keep running [Huston, 2018]. Also, when a certain part/functionality of the application needs to change, only the microservice concerned is modified and redeployed; there is no need to change and redeploy the entire application.

If a functionality needs to be replicated on different nodes, only the corresponding microservices are cloned, not the entire action flow of the application. This offers better scalability and better resource management.

Although microservices are supposed to be as self-sufficient as possible, a large number of microservices can hinder obtaining information/results when that information has to travel through many of them. Mechanisms for monitoring and managing the microservices have to exist or be developed, in order to orchestrate them and keep the efficiency level higher than the effort/fault level.

Middleware service platforms that offer microservice integration provide tools for managing and monitoring microservices, as well as for building their flows and the communication between them. Through IoT integration, these platforms can further communicate with data-gathering sensor systems or send command messages to actuators in active systems. Such platforms include IBM Cloud, RoboMQ and Temboo, whose solutions are discussed below.

IBM Cloud solutions offer a complete integration platform with all the resources for creating, monitoring and manipulating Web Services, Microservices or IoT connections. The relevant elements are detailed below. So-called Functions (see Fig. 23) allow connectivity and data collection between physical sensor systems, a cloud database and the end user. The functions communicate through messages (MoM middleware).

Figure 23. IBM Cloud Functions.

Event providers (see Fig. 24), of which Message Hub, Mobile Push and Periodic Alarm are needed.

Figure 24. IBM Cloud Event Providers.

Languages supported (see Fig. 25); the language chosen for the implementation is Python.

Figure 25. IBM Cloud languages.
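IBM Cloud Functions is based on Apache OpenWhisk. There, a Python action is a `main` function that receives the invocation parameters as a dictionary and returns a JSON-serializable dictionary.

A minimal sketch of such an action for the water model follows. The parameter names (`sensor`, `level`), the percentage unit and the 20% threshold are hypothetical. The function can be called locally the same way the platform calls it.

```python
# Hypothetical threshold: alert when the tank is below 20% of its capacity.
LOW_LEVEL_PERCENT = 20.0


def main(params: dict) -> dict:
    """Action entry point: receives invocation parameters, returns a JSON-serializable dict."""
    sensor = params.get("sensor", "unknown")
    level = float(params.get("level", 0.0))
    if level < LOW_LEVEL_PERCENT:
        return {
            "alert": True,
            "sensor": sensor,
            "message": f"Water level at {level:.1f}% on {sensor}",
        }
    return {"alert": False, "sensor": sensor}


# Local invocation, mimicking what the platform does with the JSON parameters.
print(main({"sensor": "tank-1", "level": 12}))
```

Keeping the action a pure function of its parameters makes it easy to test locally before wiring it to an event provider such as Periodic Alarm.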

The IoT simulator enables testing the whole flow with randomly generated or chosen values for the simulated sensors. This makes it possible to separate work on sensor connectivity from work on the actual action flow, and it also leaves plenty of room for optimizations and use-case testing.
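A comparable simulated sensor can be sketched in a few lines of Python, which is handy for testing a flow before any hardware is connected. The model is an assumption made for illustration: a tank level, in percent, that drops by a normally distributed amount per tick and is clamped to 0-100. A seeded random generator makes runs reproducible, which helps when comparing use cases.

```python
import random
from itertools import islice
from typing import Dict, Iterator, Optional


def simulate_tank(
    sensor_id: str,
    start_level: float = 80.0,
    mean_drop: float = 1.5,
    noise: float = 0.5,
    seed: Optional[int] = None,
) -> Iterator[Dict[str, object]]:
    """Yield an endless stream of simulated water-level readings (percent)."""
    rng = random.Random(seed)  # private generator, so runs are reproducible
    level = start_level
    tick = 0
    while True:
        level -= rng.gauss(mean_drop, noise)
        level = min(100.0, max(0.0, level))  # keep the reading physically plausible
        yield {"sensor": sensor_id, "tick": tick, "level": round(level, 2)}
        tick += 1


for reading in islice(simulate_tank("tank-1", seed=42), 5):
    print(reading)
```

In a real test harness, the loop would typically pause between readings (e.g. with `time.sleep`) to mimic the sensor's sampling interval. It would also publish each reading to the broker instead of printing it.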

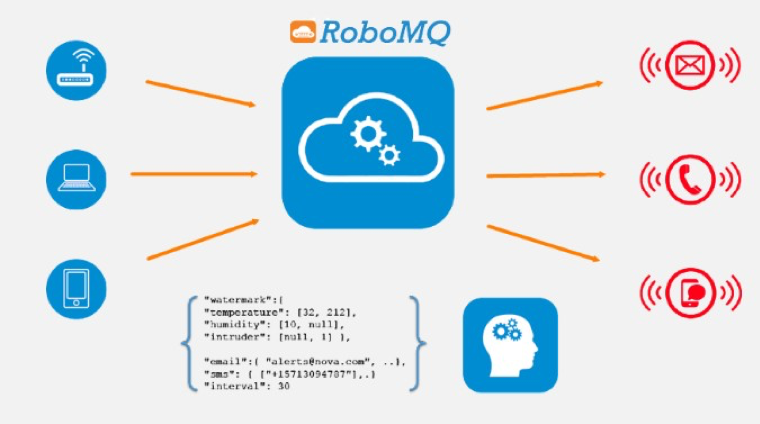

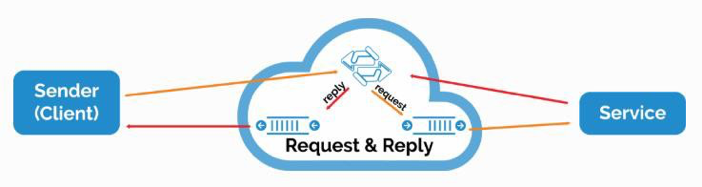

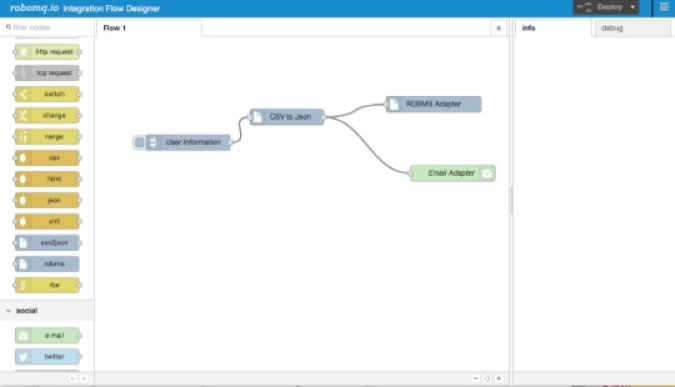

RoboMQ solutions offer an integration platform with easily deployable microservices and RabbitMQ message-oriented middleware. The relevant elements are:

- AMQP with Request-Reply (see Fig. 26);
- the Integration flow designer (see Fig. 27);
- Data Driven Alerts (see Fig. 28), which offer real-time alerts to the user depending on data obtained from devices and analyzed through machine learning rules/algorithms;
- a device simulator, which makes it possible to implement and test the workflow without actually connecting the physical system to the cloud. Like the IBM simulator, it offers value generation for specific parameters and a time setting that resembles the real sensor system.

Figure 26. AMQP with Request-Reply.

Figure 27. Integration flow designer.

Figure 28. Data Driven Alerts.
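The Request-Reply pattern listed above can be illustrated in-process. The client attaches a unique `correlation_id` and a `reply_to` destination to each request. The server sends its answer to that destination, tagged with the same ID, so the client can match replies to requests.

The sketch below is not RoboMQ's API. It uses `queue.Queue` objects in place of AMQP queues, and a hypothetical allocation server that grants at most the water still available. For simplicity, each call creates its own reply queue; an AMQP client usually keeps one reply queue and uses the correlation ID to tell replies apart.

```python
import queue
import threading
import uuid


def allocation_server(request_queue: queue.Queue, available: float) -> None:
    """Answer each request with the litres granted; None stops the server."""
    while True:
        message = request_queue.get()
        if message is None:
            break
        granted = min(message["litres"], available)
        available -= granted
        # Reply on the queue named by the client, echoing its correlation ID.
        message["reply_to"].put(
            {"correlation_id": message["correlation_id"], "granted": granted}
        )


def request_water(request_queue: queue.Queue, litres: float, timeout: float = 2.0) -> float:
    """Send one request and block until the matching reply arrives."""
    reply_queue: queue.Queue = queue.Queue()
    correlation_id = str(uuid.uuid4())
    request_queue.put(
        {"correlation_id": correlation_id, "reply_to": reply_queue, "litres": litres}
    )
    while True:
        reply = reply_queue.get(timeout=timeout)  # raises queue.Empty on timeout
        if reply["correlation_id"] == correlation_id:
            return reply["granted"]


request_queue: queue.Queue = queue.Queue()
server = threading.Thread(target=allocation_server, args=(request_queue, 60.0))
server.start()
first = request_water(request_queue, 40.0)
second = request_water(request_queue, 40.0)  # only 20 litres are left at this point
request_queue.put(None)
server.join()
print(first, second)
```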