D1.1 Technology survey: Prospective and challenges - Revised version (2018)

This document presents a technology survey of smart data and services that support water management. The technology survey was prepared by the UPB team in collaboration with the partner institutions of this project.

The survey consists of nine sections and the References. The first section, the Introduction, defines the main concepts and terms used in this document, such as hydroinformatics. The second section, Data, describes the main research directions and challenges regarding data gathering, storing, processing and sharing for Water Management systems. Several ICT technologies that support such management systems are described, such as streaming data analytics and MapReduce models. The third section is dedicated to Water Models. Two main paradigms for aquatic modelling are discussed here: physically-based and data-driven modelling. The next section provides an overview of the state-of-the-art ICT-based systems that offer functionalities such as monitoring, control and decision support for the Water Management environment. Section five analyses the involvement of non-governmental organizations worldwide in the water management field, focusing on citizen or community science in the context of the European community and, in particular, in Romania. Section six presents security solutions for the Smart Water domain. Section seven deals with the specific standards needed for hydroinformatics systems, an important topic due to the heterogeneity of the systems’ components. In Section eight, priority areas, challenges and research directions in FP7 and H2020 projects are discussed; a list of water-related FP7 and H2020 projects is provided, along with their main objectives and topics. The last section provides future research directions and water-related subjects that are correlated with the priority areas listed in HORIZON 2020.

| Site: | DATA4WATER |

| Course: | Deliverables |

| Book: | D1.1 Technology survey: Prospective and challenges - Revised version (2018) |

| Date: | Thursday, 3 April 2025, 12:27 AM |

Table of contents

- 1 Introduction

- 2 Data

- 3 Water Models

- 4 ICT based systems for monitoring, control and decision support

- 4.1 Integrated Water Resource Management

- 4.2 Event-based monitoring of in stream processes

- 4.3 Technologies for near-real-time measurements, leakage detection and localization

- 4.4 Cloud Architecture – Datacenters and in-memory computing

- 4.5 Mobile Cloud computing

- 4.6 IoT trends and relation to Water Management

- 4.7 Fault tolerance - faults are the norm not the exception

- 4.8 Integrated approaches for watershed management

- 4.9 Information-centric systems (ICS) for watershed investigation and management

- 4.10 Supervisory control and data acquisition systems

- 4.11 Decision Support Systems

- 4.12 Anomaly detection on smart sensors data used in water management

- 4.13 Machine-to-Machine Model for Water Resource Sharing in Smart Cities

- 4.14 Reinforcement Learning for Water Management

- 5 Participatory / citizen science for water management

- 6 Secure Smart Water Solutions

- 7 Standards: INSPIRE and OGC

- 8 Priority areas, challenges and research directions in FP7 and H2020 projects

- 9 Further research directions

- 10 References

1 Introduction

This survey aims to identify and refine the research directions in ICT for Water Management, focusing on the selected priority areas of HORIZON 2020. During the last couple of decades, many topics in this field have been addressed within the discipline known as Hydroinformatics. Therefore, the survey includes details about the results obtained so far in Hydroinformatics research, providing a comprehensive understanding of the current state of the art. Furthermore, the survey focuses on smart data-driven e-services in water resource management.

Hydroinformatics addresses the problems of the aquatic environment with the purpose of proper water management by means of ICT. It integrates water data obtained from a large variety of sources, elaborates models, extracts knowledge about water, and offers the results to stakeholders. Water-related disciplines, such as hydraulics, hydrology, water quality and aquatic ecology, are brought together with various ICT tools to ensure the whole information cycle in water management. This is primarily achieved by developing innovative integrated tools for data management, water modelling, forecasting and decision support. Hydroinformatics also recognizes the social aspects of water management problems, the challenges of decision making and stakeholder involvement when dealing with this shared resource. Increasingly, the focus is on developing tools for the integration of data, models, and people to support these complex water management challenges (see Journal of Hydroinformatics, What is Hydroinformatics and Hydroinformatics).

The structure of this survey follows the flow of information in Hydroinformatics systems. The first main section deals with data and covers issues related to data sources, data processing (with a focus on data uncertainty), and ICT support and technologies for data management (see Section 2).

The next section is dedicated to water modelling. After presenting the model categories, it briefly introduces physically-based models and then discusses data-driven models in more detail (see Section 3).

Another section is an extended presentation of the state of the art in ICT based systems for monitoring, control, and decision support. Important topics related to advanced technologies for monitoring, near-real-time measurements, data storage and processing, integrated approaches for water resource management, decision support systems, and others are taken into account (see Section 4).

Participatory water management (see Section 5), several security solutions for the Smart Water domain (see Section 6), and the standards used in the Hydroinformatics domain (see Section 7) are analysed as well.

The survey is based on the ICT for Water Roadmap documents (2014 and 2015), published by participants in special sessions and workshops on water management, which describe the challenges to be addressed in the ICT for water management sector, and on the EU open access initiative (see Section 8).

In the final section, further research directions resulting from the survey are mentioned (see Section 9).

Disclaimer: The survey is a compilation of documents published by the members of the research team, as well as of published research results. The list of cited sources is available at the end of the document.

2 Data

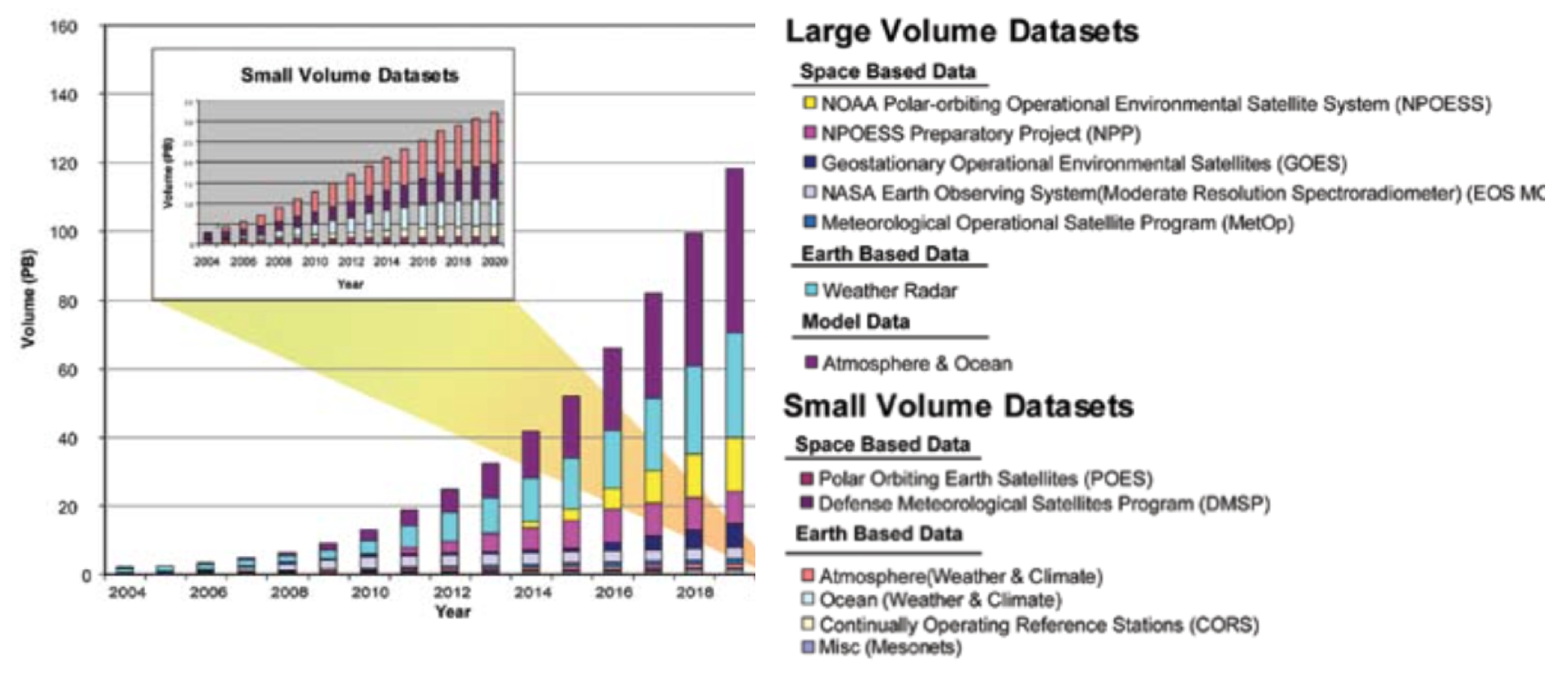

The recent advances in satellite, sensor, automation, networking, and computation capabilities resulted in an ever-increasing avalanche of data and observations about the water systems. Thus we must use these data to build more accurate and integrated representations of these water systems.

Big Data refers to large-volume, complex and growing data sets with heterogeneous and diverse dimensionality, coming from multiple sources. With the fast development of solutions for efficient data transfer, data collection capacity and storage, Big Data is now rapidly expanding in most engineering and science domains, including hydroinformatics (see Section 2.1).

One of the critical areas for the management of water resources is hydrometry, the hydro-science discipline that provides guiding protocols for the acquisition, processing, and delivery of quantitative estimates of the variables associated with the hydrological cycle (from rainfall to flow in rivers). Recently, the World Meteorological Organization identified and implemented a standardized method for conducting uncertainty analysis using rigorous and robust approaches [Muste, 2012] (see Section 2.2).

According to the World Economic Forum [Global Risk, 2015], the International Energy Agency projects that water consumption will increase by 85% by 2035 to meet the needs of energy generation and production. Global water requirements are projected to exceed sustainable water supplies by 40% by 2030. Moreover, utilities now collect millions of pieces of data each day. This calls for new methods and technologies to efficiently process and use these data, and to build more accurate and integrated representations of smart water systems (see Section 2.3).

2.1 Data sources

Water data are permanently collected from a variety of sources. Primary data sources are established water monitoring networks commonly maintained by government agencies responsible for the hydrological, meteorological or geological services. For urban water systems such monitoring networks are maintained by responsible water utilities. Further to these ‘standard’ data sources there is an increased availability of other, more heterogeneous data sources: flood marks, sensors, open data sites and data repositories, smart phones, reports, citizens, etc. Smart phones have important advantages: they are equipped with physical sensors such as pedometers, breath analysers, accelerometers, image analysers, etc.; are attached to humans and can collect information from different locations; are increasingly becoming transmitters of human-sensed data. The field of ICT for water has the challenge of merging these different water data sources in new applications that will deliver additional value to the end users.

Water data refer to different aspects, which depend on the applications’ requirements: water quantity, quality, rainfall, movement of water within the soil, groundwater movement, pollution, etc. In urban water systems, data refer to water pressure, energy consumption, water quality and others.

Data can have different formats; data can be structured (such as those collected from sensors) or unstructured (e.g. textual data received in social networks). Many water data are time series. Data can also be related, meaning that two variables can have a functional relationship.

Water systems (geological units, rivers, lakes, soil distribution, precipitation) are distributed in space. Water data are therefore related to the spatial context and, implicitly, to geographical information and GIS (Geographical Information Systems). GIS has a broader scope, covering not only the water “world” but also transportation, urban planning, and other domains. GIS data are available from various sources, in different formats and with different sharing policies, issues that are very similar to those of water data. To deal with these challenges, the GIS community has recently developed standards for sharing spatial data, primarily within the Open Geospatial Consortium (OGC, see Section 7). This community also developed the concept of SDI (Spatial Data Infrastructure), which supports easy deployment, discovery and access to geospatial data. These developments are very relevant for the water domain. In fact, standards for other types of water data (such as WaterML, for sharing time series data) are being developed within OGC. However, while the development and establishment of new data standards continue, new challenges emerge with respect to the effective use of the new, heterogeneous data coming from the ‘non-standard’ sources mentioned above.

2.2 Uncertain data

Uncertainty in measurements arises from the randomness and complexity of physical phenomena and from errors in the observation and/or processing of the measured data. Users of one discipline also need to understand the uncertainty of the data and products from another discipline before using them, and the methodology used for uncertainty estimation needs to be consistent. Finally, various communities, such as the public, benefit from seeing the uncertainty expressed for the data and products of various disciplines (see Uncertainty analysis).

There are about 160 standards related to the scope of uncertainty analysis (UA), issued by various ISO technical committees, which can be structured in three types: General UA, Flow Measurement UA, and Specific Flow Measurement UA (see Standards related to the scope of UA).

Uncertainty sources involve the following classes:

- variables that are used (i.e., instruments, protocols, design site and flow characteristics);

- spatial changes in the stream cross section due to the presence of bed forms, vegetation, and ice/debris presence;

- temporal changes in the flow due to backwater, variable channel storage, and, unsteady flows (see Uncertainty sources).

The implementation of the UA assumes that the uncertainties involved are small compared with the measured values, with the exception being when the measurements are close to zero (see Practical Considerations).

Uncertainty analysis

Uncertainty analysis is a rigorous methodology for estimating uncertainties in measurements and in the results calculated from them combining statistical and engineering concepts. The objective of a measurement is to determine the value of a measurand that is the value of the particular quantity to be measured. A measurement for a specified measurand therefore entails the measurement methods and procedures along with the effect of the influence quantities (environmental factors). In general, a measurement has imperfections that give rise to an error in the measurement result. Consequently, the result of a measurement is only an approximation or estimate of the value of the measurand and thus is complete only when accompanied by a statement of the uncertainty of that estimate. In practice, the required specification or definition of the measurand is dictated by the required accuracy of measurement. The accuracy of a measurement indicates the closeness of agreement between the result of a measurement and the value of the measurand.

The measurement error is defined as the result of a measurement minus a true value of the measurand. Neither the true value nor the value of the measurand can ever be known exactly because of the uncertainty arising from various effects.

In typical measurement situations, several physical parameters (e.g., flow velocity, depth, and channel width) are physically measured to obtain a derived quantity (e.g., stream discharge). The individual physical measurements are then used in a data reduction equation (e.g., velocity–area method) to obtain the targeted value. Consequently, the two major steps involved in the uncertainty analysis are:

- identification and estimation of the uncertainties associated with the measurement of the individual variables, and

- propagation of the individual measurement uncertainties in the final result.
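To illustrate the two steps above, the following minimal Python sketch applies the velocity-area data reduction equation, Q = Σ wᵢ dᵢ vᵢ (subsection width, depth and mean velocity), and propagates the elemental standard uncertainties to Q with a first-order Taylor series, assuming all inputs are uncorrelated. The `Vertical` type, the function names and the numbers are hypothetical. Standardized procedures include further terms, such as the uncertainty due to the limited number of verticals, and this sketch omits them.

```python
import math
from dataclasses import dataclass


@dataclass
class Vertical:
    """One subsection of a hypothetical velocity-area gauging (SI units)."""
    width: float        # subsection width w_i, m
    depth: float        # flow depth d_i, m
    velocity: float     # mean velocity v_i in the vertical, m/s
    u_width: float      # standard uncertainty of w_i, m
    u_depth: float      # standard uncertainty of d_i, m
    u_velocity: float   # standard uncertainty of v_i, m/s


def discharge(verticals):
    """Data reduction equation of the velocity-area method: Q = sum(w_i * d_i * v_i)."""
    return sum(v.width * v.depth * v.velocity for v in verticals)


def discharge_uncertainty(verticals):
    """Propagate elemental uncertainties to Q with a first-order Taylor series.

    Assumes uncorrelated inputs. The sensitivity coefficients are the partial
    derivatives dQ/dw_i = d_i*v_i, dQ/dd_i = w_i*v_i and dQ/dv_i = w_i*d_i; the
    weighted contributions are combined in quadrature (root-sum-square).
    """
    variance = 0.0
    for v in verticals:
        variance += (v.depth * v.velocity * v.u_width) ** 2
        variance += (v.width * v.velocity * v.u_depth) ** 2
        variance += (v.width * v.depth * v.u_velocity) ** 2
    return math.sqrt(variance)


# Hypothetical gauging with three verticals.
verticals = [
    Vertical(2.0, 1.0, 0.5, 0.01, 0.02, 0.025),
    Vertical(2.0, 1.5, 0.8, 0.01, 0.02, 0.025),
    Vertical(2.0, 1.0, 0.5, 0.01, 0.02, 0.025),
]
q = discharge(verticals)
u_q = discharge_uncertainty(verticals)
print(f"Q = {q:.2f} m3/s, u(Q) = {u_q:.3f} m3/s")
```

The sensitivity coefficients show directly which measured variable dominates the result. In this example, the velocity terms contribute most of the variance.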

While the methods for estimation of the elemental sources of uncertainty are quite similar among various communities (statistical analysis or use of previous experience, expert opinion, and manufacturer specifications), the methods used to determine how those sources of uncertainty are accounted for in the final result have differed widely [TCHME, 2003]. In addition, variations can even occur within a given methodology. Coleman and Steele [Coleman, 1999] discuss six different variations of the Taylor series expansion estimation method (which is the most used uncertainty-estimation approach for the propagation of uncertainties).

Uncertainty analysis is a critical component of assessing the performance of flow measurement techniques, for both conventional and newer instrumentation and methodologies. These analyses are of fundamental importance for applying risk management procedures and for sustainable water resources management, as they ensure that the methodology and instrumentation selected for a task will deliver the required accuracy. They also make it possible to invest in hydrological instrumentation in the most cost-effective manner.

Standards related to the scope of UA

Given the vast amount of publications on the topic, a recent overview of the flow measurement standards issued by the International Organization for Standardization (ISO, the most authoritative institution in the area of standards) lists about 160 standards related to the scope of UA, issued by various ISO technical committees. These can be structured in three types of Uncertainty Analysis (UA) publications (i.e., frameworks, standards, guidelines, or references):

- General UA (GUA)

- Flow Measurement UA (FMUA), and

- Specific Flow Measurement UA (SFMUA).

General UA (GUA) approaches

UA was a major concern of scientists and practitioners, as well as of the standardization bodies. In 1986, the efforts of the American Society of Mechanical Engineers (ASME) led to the adoption of the ASME-PTC 19.1 Measurement Uncertainty standard [ASME, 1986], that was recognized also by: the Society of Automotive Engineers (SAE); the American Institute of Aeronautics and Astronautics (AIAA); ISO; the Instrument Society of America – currently the Instrumentation, Systems, and Automation Society (ISA); the US Air Force, and the Joint Army Navy NASA Air Force (JANNAF).

In parallel, owing to intense international debate and a lack of consensus, the problem of a unified approach to uncertainty in measurements was addressed in 1978 by the Bureau International des Poids et Mesures (BIPM), at the initiative of the world’s highest authority in metrology, the Comité International des Poids et Mesures (CIPM), and a set of recommendations was elaborated. Eventually, the diverse approaches were consolidated by ISO, which assembled a joint group of international experts representing seven organizations: BIPM, ISO, the International Electrotechnical Commission (IEC), the International Federation of Clinical Chemistry (IFCC), the International Union of Pure and Applied Chemistry (IUPAC), the International Union of Pure and Applied Physics (IUPAP), and the International Organization of Legal Metrology (OIML). This group prepared the “Guide to the Expression of Uncertainty in Measurement” [GUM, 1993], the first widely internationally recognized set of guidelines for the conduct of uncertainty analysis.

GUM provides general rules for the evaluation and expression of uncertainty in measurement rather than detailed and specific instructions tailored to any specific field of study. The main distinction between GUM and previous methods is GUM’s position that there is no inherent difference between an uncertainty arising from a random effect and one arising from a correction for a systematic effect (an error is classified as random if it contributes to the scatter of the data; otherwise, it is a systematic error). GUM uses a classification based on how the uncertainties are estimated:

- Type A (evaluated statistically);

- Type B (evaluated by other means).

GUM provides a realistic value of uncertainty based on the methodology’s fundamental principle that all components of uncertainty are of the same nature and are to be treated identically. The GUM / JCGM 100:2008 methodology is recognized today as the most authoritative framework for rigorous uncertainty assessment; however, it provides general rules for evaluating and expressing uncertainty in measurement rather than detailed, scientific- or engineering-specific instructions. GUM / JCGM 100:2008 does not discuss how the uncertainty of a particular measurement result, once evaluated, may be used for different purposes, for example to draw conclusions about the compatibility of that result with other similar results, to establish tolerance limits in a manufacturing process, or to decide whether a certain course of action may be safely undertaken.
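The GUM classification can be illustrated with a minimal Python sketch. It computes a Type A standard uncertainty from repeated readings and a Type B standard uncertainty from an assumed rectangular distribution (one common Type B model, not the only one). It then combines them in quadrature as components of the same nature, and computes an expanded uncertainty with a coverage factor k = 2. The function names and the readings are hypothetical, and the components are assumed to be uncorrelated.

```python
import math
import statistics


def type_a(readings):
    """Type A evaluation: standard uncertainty of the mean of n repeated
    readings, s / sqrt(n), where s is the sample standard deviation."""
    return statistics.stdev(readings) / math.sqrt(len(readings))


def type_b_rectangular(half_width):
    """Type B evaluation (one common case): a quantity known only to lie within
    +/- a (e.g. a manufacturer tolerance), modelled as a rectangular
    distribution with standard uncertainty a / sqrt(3)."""
    return half_width / math.sqrt(3)


def combined(*components):
    """Combined standard uncertainty of uncorrelated components in the same
    units, treated identically whatever their type (A or B)."""
    return math.sqrt(sum(u ** 2 for u in components))


def expanded(u_c, k=2.0):
    """Expanded uncertainty U = k * u_c; k = 2 is a common coverage factor."""
    return k * u_c


# Hypothetical repeated depth readings (m) and a +/- 0.01 m gauge tolerance.
depths = [1.02, 0.98, 1.01, 0.99, 1.00]
u_a = type_a(depths)
u_b = type_b_rectangular(0.01)
u_c = combined(u_a, u_b)
print(f"u_A={u_a:.4f} m, u_B={u_b:.4f} m, u_c={u_c:.4f} m, U(k=2)={expanded(u_c):.4f} m")
```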

Flow Measurement UA (FMUA) and Specific FMUA (SFMUA) approaches

Minimal guidance is available on UA for flow measurements ([WMO, 2007]; Pilon et al., 2007). A new edition of the Guide to Hydrological Practices, published in 2008 [WMO, 2008], reviews new instrumentation and technologies for producing hydrological information but does not address the uncertainty analysis of the data and information. Despite the many authoritative documents on flow measurement that were available (e.g., [ASME, 1971]), the first effort to develop a national standard for flow measurement in the U.S.A. was initiated in 1973 [Abernethy, 1985]. The first standard on flow measurement developed by ISO was “Measurement Flow by Means of Orifice Plates and Nozzles” [ISO, 1967], which is based on compromises between U.S. procedures and those in use throughout Western Europe. All of these efforts addressed the accuracy of flow measurement in varying depth. However, each of the resulting publications reported “personalized” procedures for estimating uncertainty and was often biased by the judgment of the individuals involved in developing the procedure [Abernethy, 1985].

Because of the diversity and large number of available standards on flow measurement (there are 64 ISO standards), guidance is necessary on the different types of standards (how and when they can be used), on the decision process for implementing standards, and on the key access points for information about the standards and their availability. ISO/TR 8363 [ISO, 1997] is recommended as the “standard of the standards” for flow measurement, as it gives the most qualified guidance on selecting an open channel flow measurement method and an applicable standard. The first criterion that ISO/TR 8363 uses to select a specific flow measurement instrument or technique is the required or expected level of uncertainty of the measurement.

Uncertainty sources

The estimation of the uncertainties of streamflow estimates at gaging stations based on the rating curves (RCs) associated with the stage-discharge (HQRC) and index-velocity (IVRC) methods involves two distinct aspects:

- the estimation of the accuracy of the direct measurements for constructing and subsequently using the RCs, and

- the estimation of the accuracy of the RCs themselves (i.e., regression, extrapolation, shifting).

Similarly, the continuous slope-area (CSA) method is subject to uncertainty from the direct measurements and from the analytical methods and their assumptions.
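Stage-discharge rating curves of the HQRC type are commonly expressed as a power law, Q = a(h - h0)^b. Here h is the stage and h0 the stage of zero flow. The minimal Python sketch below fits such a curve by least squares in log space and assumes that h0 is known. This is one common fitting choice, not necessarily the procedure used in the works cited here, and the names and data are hypothetical. When real gaugings are used, the scatter of the regression residuals is one component of the rating-curve uncertainty mentioned above.

```python
import math


def fit_rating_curve(stages, discharges, h0):
    """Fit Q = a * (h - h0)**b by ordinary least squares on the linearized form
    log(Q) = log(a) + b * log(h - h0). Requires every stage h > h0."""
    xs = [math.log(h - h0) for h in stages]
    ys = [math.log(q) for q in discharges]
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    sxx = sum((x - x_mean) ** 2 for x in xs)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    b = sxy / sxx
    a = math.exp(y_mean - b * x_mean)
    return a, b


def rated_discharge(h, a, b, h0):
    """Convert a stage reading to discharge; extrapolating beyond the
    calibrated stage range adds uncertainty."""
    return a * (h - h0) ** b


# Hypothetical, noise-free calibration gaugings generated from Q = 5 * (h - 0.2)**1.6.
h0 = 0.2
stages = [0.5, 1.0, 1.5, 2.0, 3.0]
discharges = [5.0 * (h - h0) ** 1.6 for h in stages]
a, b = fit_rating_curve(stages, discharges, h0)
print(f"a={a:.3f}, b={b:.3f}, Q(2.5 m)={rated_discharge(2.5, a, b, h0):.2f} m3/s")
```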

Using the generic grouping of the sources of uncertainties in gaging methods proposed by [Fread, 1975], we can distinguish the following classes:

- variables that are used (i.e., instruments, protocols, design site and flow characteristics)

- spatial changes in the stream cross section due to the presence of bed forms, vegetation, and ice/debris presence. These changes are typically evolving slower in time (from storm event to season duration) and can be reversible or permanent.

- temporal changes in the flow due to backwater, variable channel storage, and, unsteady flows. Typically, these changes are of the order of hours or days.

Assessment of the individual sources of uncertainty in the three categories above is not available, for several reasons:

- there is currently no comprehensive and widely accepted methodology for conducting uncertainty analysis (UA) of hydrometric measurements. Efforts are being made in this community to identify robust, standardized methodologies for assessing the uncertainties of both direct measurements (e.g., [Muste, 2012]) and rating curves (e.g., [Le Coz, 2014]). These efforts are quite extensive, as conducting UA requires specialized experiments similar to the calibrations performed by manufacturers for industrial flow meters, and such calibrations are obviously much more difficult to conduct in field conditions.

- the level of uncertainty in the HQRC, IVRC, and CSA method estimates induced by site conditions and changes in flow status is unknown. The situation is especially critical for high flows (e.g., floods), as these events are infrequent and preparing to acquire measurements is more involved than in steady flows.

Despite the challenges and high costs, these efforts are currently intensifying as the demand for data quality increases [Le Coz, 2015], [Muste, 2015].

The same method can produce different results depending on the evolution of the underlying physical phenomena. For steady flows, for example, the HQRC vs. IVRC comparison presented in [Muste, 2015] suggests that IVRC estimates are less precise (i.e., show more scatter) than HQRC estimates.

Another aspect that distinguishes the IVRC from HQRC is that the former method is sensitive to the change in the flow structure passing through the line of sight.
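In the index-velocity method, discharge is typically computed as Q = A(h) · V. The mean channel velocity V is related to a measured index velocity Vi through a calibration regression, and the area A comes from a stage-area relation. The minimal Python sketch below assumes a linear index rating V = c0 + c1·Vi and, purely for illustration, a rectangular cross section. The names, the channel geometry and the data are hypothetical. The sketch also shows why the method is sensitive to the flow structure: any change that alters the relation between Vi and V passes directly into Q.

```python
def fit_index_rating(index_velocities, mean_velocities):
    """Least-squares line V = c0 + c1 * Vi, calibrated against concurrent
    direct discharge measurements (mean velocity = measured Q / area)."""
    n = len(index_velocities)
    x_mean = sum(index_velocities) / n
    y_mean = sum(mean_velocities) / n
    sxx = sum((x - x_mean) ** 2 for x in index_velocities)
    sxy = sum((x - x_mean) * (y - y_mean)
              for x, y in zip(index_velocities, mean_velocities))
    c1 = sxy / sxx
    c0 = y_mean - c1 * x_mean
    return c0, c1


def area_rectangular(stage, width, bed_elevation):
    """Hypothetical stage-area relation for a rectangular section."""
    return width * (stage - bed_elevation)


def index_velocity_discharge(stage, index_velocity, c0, c1, width, bed_elevation):
    """Q = A(h) * (c0 + c1 * Vi)."""
    return area_rectangular(stage, width, bed_elevation) * (c0 + c1 * index_velocity)


# Hypothetical calibration data consistent with V = 0.05 + 0.9 * Vi.
vi = [0.2, 0.5, 0.8, 1.1]
v_mean = [0.05 + 0.9 * x for x in vi]
c0, c1 = fit_index_rating(vi, v_mean)
q = index_velocity_discharge(2.0, 1.0, c0, c1, width=10.0, bed_elevation=0.5)
print(f"c0={c0:.3f}, c1={c1:.3f}, Q={q:.2f} m3/s")
```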

To compare the performance of HQRC vs. CSA, studies were conducted at USGS streamgage station 05454220 on Clear Creek, a small stream in Iowa, USA. The differences between the two curves were up to 20% at this site (differences are site specific), indicating that the less expensive synthetic RC (no calibration needed) can be used as a surrogate when monitoring agencies lack resources. Moreover, the increased availability of affordable radar- or acoustic-based sensors that non-intrusively measure the free-surface elevation makes this simplified SA approach attractive for a plethora of applications where this degree of uncertainty is acceptable.
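A slope-area estimate can be sketched with Manning's equation in SI units, Q = (1/n) A R^(2/3) S^(1/2). The water-surface slope S is taken from two stage sensors a known distance apart. The sketch assumes, as a simplification, that the CSA method discussed above is built on Manning's equation with a measured surface slope. The roughness coefficient n, the rectangular geometry and all numbers below are hypothetical. No calibration gaugings are needed, so the accuracy depends on the assumed n and on how well the surface slope represents the friction slope. This is one plausible reason why the differences noted above are site specific.

```python
import math


def surface_slope(stage_upstream, stage_downstream, reach_length):
    """Water-surface slope from two stage sensors reach_length metres apart."""
    return (stage_upstream - stage_downstream) / reach_length


def manning_discharge(width, depth, slope, n):
    """Manning's equation (SI) for a rectangular section:
    Q = (1/n) * A * R**(2/3) * sqrt(S), with A = B*y and R = A / (B + 2y)."""
    area = width * depth
    hydraulic_radius = area / (width + 2.0 * depth)
    return (1.0 / n) * area * hydraulic_radius ** (2.0 / 3.0) * math.sqrt(slope)


# Hypothetical reach: sensors 500 m apart, 10 m wide channel, 2 m deep.
s = surface_slope(101.2, 101.0, 500.0)
q = manning_discharge(width=10.0, depth=2.0, slope=s, n=0.035)
print(f"S={s:.5f}, Q={q:.2f} m3/s")
```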

Unsteady flows are ephemeral but unavoidable in natural streams, therefore hysteresis is always present to some degree irrespective of the river size.

It is often stated that, for most streams, hysteresis effects are small and cannot be distinguished from the uncertainty of the instruments and methods used to construct the RCs. On the other hand, theoretical considerations leave no doubt that the use of HQRCs for unsteady flows is associated with hysteresis, however small it may be [Perumal, 2014]. The magnitude of the hysteresis effect can only be demonstrated by acquiring direct discharge measurements continuously over the whole propagation of the flood wave, as was done in the study summarized here. Fortunately, advances in the efficiency of new measurement technologies make this task increasingly feasible.

The non-uniqueness of the relationships between flow variables during unsteady flows was also observed in detailed laboratory experiments conducted by Song and Graf [Song, 1996], which showed that, during the passage of the hydrograph, the mean cross-sectional velocities on the rising limb are larger than on the falling limb for the same flow depth. Unfortunately, this level of analytical detail cannot easily be achieved in field conditions.

The experimental evidence [Muste, 2013] suggests that recourse needs to be made to the fundamental equations for the unsteady open channel flow (e.g., Saint-Venant equations) when formulating protocols for IVRC method. The correction protocols would be similar to the corrections applied for the HQRC protocols used in unsteady flows. Another alternative for enhancing the performance of IVRC for unsteady flow would be to use the segmentation approach described by Ruhl and Simpson [Ruhl, 2005] in the construction of the curve for unsteady flows.
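One example of an unsteady-flow correction applied to HQRC protocols is the classical Jones formula, Q = Qs·sqrt(1 + (dh/dt)/(S0·c)), which follows from a simplified form of the Saint-Venant momentum equation. Here Qs is the steady-flow rating, S0 the bed slope and c the flood-wave celerity. The minimal Python sketch below uses this formula as an illustrative technique chosen for this example, not as the correction used in the studies cited above. The steady rating, S0, c and the hydrograph are hypothetical. Along a hydrograph, the correction produces a loop: at the same stage, discharge on the rising limb exceeds discharge on the falling limb, which is consistent with the hysteresis discussed in this section.

```python
import math


def jones_correction(q_steady, dh_dt, bed_slope, celerity):
    """Jones formula: Q = Q_s * sqrt(1 + (dh/dt) / (S0 * c))."""
    factor = 1.0 + dh_dt / (bed_slope * celerity)
    if factor <= 0.0:
        raise ValueError("correction undefined for this dh/dt, S0 and c")
    return q_steady * math.sqrt(factor)


def loop_rating(times, stages, steady_rating, bed_slope, celerity):
    """Apply the Jones correction along a stage hydrograph; dh/dt is estimated
    with central differences (one-sided at the first and last samples)."""
    n = len(stages)
    corrected = []
    for i in range(n):
        lo, hi = max(i - 1, 0), min(i + 1, n - 1)
        dh_dt = (stages[hi] - stages[lo]) / (times[hi] - times[lo])
        q_s = steady_rating(stages[i])
        corrected.append(jones_correction(q_s, dh_dt, bed_slope, celerity))
    return corrected


def steady(h):
    """Hypothetical steady-flow rating curve."""
    return 5.0 * h ** 1.6


times = [0.0, 3600.0, 7200.0, 10800.0, 14400.0]   # s
stages = [1.0, 1.5, 2.0, 1.5, 1.0]                  # m, rising then falling
q = loop_rating(times, stages, steady, bed_slope=5e-4, celerity=1.5)
print([round(x, 2) for x in q])
```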

The comparison of CSA vs. HQRC [Muste, 2015] shows that, on the rising limbs, the high flows given by the CSA method occur earlier and are larger than those predicted by the HQRC method. These findings are consistent with previous laboratory and field measurements (e.g., [Song, 1996]; [Perumal, 2004]; [Gunawan, 2010]) and have practical implications for both flood and stream transport processes.

The main conclusion on the performance of conventional methods in observing steady flows [Muste, 2015] is that the HQRC method is more robust and less sensitive to changes in flow structure (produced by imperfect gaging-site selection and ephemeral changes in the flow distribution) than the IVRC and simplified CSA methods. In contrast, the HQRC method performs worse than the other methods in unsteady flows, because the typical construction protocol for RCs is based on steady-flow assumptions.

Many distributed systems use the event-driven approach in support of monitoring and reactive applications. Examples include: supply chain management, transaction cost analysis, baggage management, traffic monitoring, environment monitoring, ambient intelligence and smart homes, threat / intrusion detection, and so forth.

Events can be primitive, which are atomic and occur at one point in time, or composite, which include several primitive events that occur over a time interval and follow a specific pattern. A composite event has an initiator (the primitive event that starts the composite event) and a terminator (the primitive event that completes it). The occurrence time can be that of the terminator (point-based semantics) or can be represented as a pair of times, one for the initiator event and the other for the terminator event [Paschke, 2008], [Dasgupta, 2009]. Interval temporal logic [Allen, 1994] is used to derive the semantics of interval-based events when they are combined by specific operators in a composite event structure.
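The distinction between point-based and interval-based semantics can be shown with a single sequence operator: "A followed by B within a time window". In the minimal Python sketch below, the event types, the window and the consumption policy are hypothetical choices. Under this policy, each terminator pairs with the most recent unmatched initiator, which is then consumed. Event algebras in the literature define several alternative consumption policies and many more operators.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional, Tuple


@dataclass(frozen=True)
class Event:
    kind: str
    time: float  # a primitive event occurs at a single point in time


@dataclass(frozen=True)
class CompositeEvent:
    kind: str
    initiator: Event
    terminator: Event

    @property
    def point_time(self) -> float:
        """Point-based semantics: the occurrence time of the terminator."""
        return self.terminator.time

    @property
    def interval(self) -> Tuple[float, float]:
        """Interval-based semantics: (initiator time, terminator time)."""
        return (self.initiator.time, self.terminator.time)


def detect_sequence(events: Iterable[Event], first: str, second: str,
                    window: float, name: str) -> List[CompositeEvent]:
    """SEQ(first, second) within `window` time units, scanning events in time order."""
    detected: List[CompositeEvent] = []
    pending: Optional[Event] = None  # most recent unmatched initiator
    for ev in sorted(events, key=lambda e: e.time):
        if ev.kind == first:
            pending = ev
        elif ev.kind == second and pending is not None:
            if ev.time - pending.time <= window:
                detected.append(CompositeEvent(name, pending, ev))
            pending = None  # initiator consumed (or expired) either way
    return detected


# Hypothetical network events: a pressure drop followed by a flow spike.
stream = [Event("pressure_drop", 0.0), Event("flow_spike", 30.0),
          Event("pressure_drop", 100.0), Event("flow_spike", 200.0)]
found = detect_sequence(stream, "pressure_drop", "flow_spike", window=60.0,
                        name="suspected_burst")
for c in found:
    print(c.kind, c.point_time, c.interval)
```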

Event streams are time-ordered sequences of events, usually append-only (events cannot be removed from a sequence). An event stream may be bounded by a time interval or by another conceptual dimension (content, space, source, certainty), or it may be open-ended and unbounded. Event stream processing handles multiple streams with the aim of identifying meaningful events and deriving relevant information from them. This is achieved by detecting complex event patterns, event correlation and abstraction, event hierarchies, and relationships between events such as causality, membership, and timing. Event stream processing is thus focused on high-speed querying of data in streams of events and on applying transformations to the event data. Processing a stream of events in their order of arrival has some advantages: algorithms increase system throughput because they process events “on the fly”; more specifically, they process the events in the stream as they occur and send the results immediately to the next computation step. The main applications benefiting from event streams are algorithmic trading in financial services, RFID event processing applications, fraud detection, process monitoring, and location-based services in telecommunications.
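Processing events "on the fly" in order of arrival can be illustrated with a time-based sliding window. Each incoming sensor reading updates a running aggregate incrementally, and a result is emitted immediately, so the whole stream never has to be stored. The minimal Python sketch below assumes non-decreasing timestamps and a positive window length. The function name and the readings are hypothetical. Production stream processors also handle out-of-order events, distribution and fault tolerance, which this sketch does not.

```python
from collections import deque
from typing import Deque, Iterable, Iterator, Tuple


def windowed_mean(stream: Iterable[Tuple[float, float]],
                  window: float) -> Iterator[Tuple[float, float]]:
    """For each (timestamp, value) event, yield (timestamp, mean of the values
    whose timestamps lie in (timestamp - window, timestamp]).

    Assumes non-decreasing timestamps and window > 0, so the newest event is
    never evicted and the buffer is never empty when the mean is computed.
    """
    buffer: Deque[Tuple[float, float]] = deque()
    total = 0.0
    for t, value in stream:
        buffer.append((t, value))
        total += value
        # Evict events that have slid out of the window (oldest first).
        while buffer[0][0] <= t - window:
            _, old = buffer.popleft()
            total -= old
        yield t, total / len(buffer)  # emitted immediately to the next step


# Hypothetical turbidity readings (timestamp in s, value in NTU).
readings = [(0.0, 1.0), (10.0, 3.0), (20.0, 5.0), (30.0, 7.0)]
results = list(windowed_mean(readings, window=20.0))
print(results)
```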

Temporal and causal dependencies between events must be captured by specification languages and handled by event processors. The specification should be expressive enough to handle different application types of varying complexity and to capture common usage patterns. Moreover, the system should allow complete process specification without imposing limiting assumptions about the concrete event processing architecture, which requires a certain abstraction of the modelling process. The pattern of interesting events may change during execution; hence, event processing should allow and capture these changes through dynamic behaviour. The usability of the specification language should be coupled with an efficient implementation in terms of runtime performance: near-real-time detection and non-intrusiveness [Mühl, 2006]. Distributed implementations of event detectors and processors often achieve these goals. By distributing composite event detection, scalability is also achieved, through decomposing complex event subscriptions into sub-expressions and detecting them at different nodes in the system [Anicic, 2009]. To these requirements we add the fault tolerance constraints imposed on event composition: correct execution in the presence of failures or exceptions should be guaranteed based on formal semantics. Note that not all these requirements can be satisfied simultaneously: a very expressive composite event service may not result in an efficient or usable system, while a very efficient implementation of composite event detectors may lead to systems with low expressiveness. In this chapter, we describe the existing solutions that attempt to balance these trade-offs.

Composite events can be described as hierarchical combinations of events. The constituent events are associated with the leaves of a tree and are combined by operators (specific to an event algebra) that reside in the other nodes. Another approach is continuous queries, which consist of applying queries to streams of incoming data [Chandrasekaran, 2002]. A derived event is generated from other events and is frequently enriched with data from other sources. The event representation must describe the event completely, so that potential consumers can use this information without having to go back to the source for other information related to the event.

Many event processing engines are built around the Event, Condition, Action (ECA) paradigm [Chakravarthy, 2007]. This paradigm was first used in Database Management Systems (DBMS) and was then extended to many other categories of systems. Its elements are described as a rule with three parts:

- the event that triggers the rule invocation;
- the condition that restricts the execution of the action;
- the action executed as a consequence of the event occurrence.

To fit this model, the event processing engine includes components for complex event detection, condition evaluation, and rule management. In this model, event processing means detecting complex events from the primitive events that have occurred, evaluating the relevant context in which the events occurred, and triggering actions if the evaluation result satisfies the specified condition. Event detection uses an event graph, which is a merge of several event trees [Chakravarthy, 1994]. Each tree corresponds to the expression that describes a composite event. A leaf node corresponds to a primitive event, while intermediate nodes represent composite events. The event detection graph is obtained by merging common sub-graphs. When a primitive event occurs, it is sent to its corresponding leaf node, which propagates it to its parents. When a composite event is detected, the associated condition is submitted for evaluation. The context can have different characteristics (e.g. temporal, spatial, state, and semantic). It is preserved in variables and can be used not only for condition evaluation but also when performing the action.
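To make the detection process concrete, the following Python sketch builds a tiny event detection graph. Primitive events enter at leaf nodes, and a conjunction node signals a composite event once all its children have occurred. An ECA rule attached to the composite node then evaluates its condition on the accumulated context before running its action. The class names, the single `And` operator and the flood-related event names are hypothetical illustrations, not the API of any particular engine. Because a leaf may subscribe several parents, common sub-graphs can be shared. Real event algebras add sequence, disjunction, negation and temporal operators, together with several context-consumption policies.

```python
class Node:
    """A node of the event detection graph; it forwards detections to its parents."""

    def __init__(self, name):
        self.name = name
        self.parents = []

    def subscribe(self, parent):
        self.parents.append(parent)

    def emit(self, context):
        for parent in self.parents:
            parent.child_fired(self, context)


class Primitive(Node):
    """Leaf node: a primitive event is injected here and propagated to its parents."""

    def occur(self, context):
        self.emit(context)


class And(Node):
    """Composite event detected once every child has occurred, in any order.
    Only the most recent occurrence of each child is kept (a simple context policy)."""

    def __init__(self, name, *children):
        super().__init__(name)
        self.children = children
        self.seen = {}
        for child in children:
            child.subscribe(self)

    def child_fired(self, child, context):
        self.seen[child.name] = context
        if len(self.seen) == len(self.children):
            merged = {k: v for ctx in self.seen.values() for k, v in ctx.items()}
            self.seen = {}
            self.emit(merged)


class Rule:
    """ECA rule: Event (the node it subscribes to), Condition, Action."""

    def __init__(self, event, condition, action):
        self.condition = condition
        self.action = action
        event.subscribe(self)

    def child_fired(self, node, context):
        if self.condition(context):
            self.action(context)


# Hypothetical example: heavy rain AND a high upstream level form a flood-risk event.
rain = Primitive("heavy_rain")
level = Primitive("high_level")
flood_risk = And("flood_risk", rain, level)
alerts = []
Rule(flood_risk, condition=lambda c: c["level_m"] > 3.0, action=alerts.append)

rain.occur({"rain_mm": 40})
level.occur({"level_m": 3.4})   # composite detected, condition true -> alert
rain.occur({"rain_mm": 12})
level.occur({"level_m": 2.1})   # composite detected, condition false -> no alert
print(alerts)                   # [{'rain_mm': 40, 'level_m': 3.4}]
```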

Studies emphasize the strong dependence on the test location. The findings agree with theoretical considerations and are consistent with a handful of previous studies of a similar nature. The existing studies point out the need for a systematic effort to evaluate the effect of flow unsteadiness on the various types of rating curves (RCs) used at gauges located on medium and small streams. Fortunately, this task is considerably eased nowadays by a new generation of non-intrusive (i.e., optical and image-based) instruments. These instruments can be programmed to monitor flood events throughout their duration.

Practical Considerations

The implementation of uncertainty analysis (UA) assumes that the uncertainties involved are small compared with the measured values, except when the measurements are close to zero. For this to be true, the following conditions must be carefully met [AIAA, 1995]; [GUM, 1993]:

- the measurement process is understood, critically analysed, and well defined

- the measurement system and process are controlled

- all appropriate calibration corrections have been applied

- the measurement objectives are specified

- the instrument package and data reduction procedures are defined

- the uncertainties quoted in the analysis of a measurement are obtained with full intellectual honesty and professional skill

If all of the quantities on which the result of a measurement depends are varied, its uncertainty can be evaluated by statistical means (the Type A evaluation method). However, this is rarely possible in practice due to limited time and resources. The uncertainty of a measurement result is therefore usually evaluated using a mathematical model of the measurement and the law of propagation of uncertainty. Thus, implicit in the GUM (JCGM 100:2008) is the assumption that a measurement can be modelled mathematically to the degree imposed by the required accuracy of the measurement. Because the mathematical model may be incomplete, all relevant quantities should be varied to the fullest practical extent, so that the evaluation of the uncertainty can be based as much as possible on observed data.
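The Python sketch below illustrates the two evaluation routes. It first computes a Type A standard uncertainty (the experimental standard deviation of the mean, s/√n) for repeated readings. It then applies the law of propagation of uncertainty for uncorrelated input quantities, u_c(y)² = Σ (∂f/∂x_i)² u(x_i)², with the sensitivity coefficients estimated by central differences. The velocity-area model Q = v·A, the function names and all the numbers are hypothetical. Correlated inputs would additionally require the covariance terms of the full GUM formula.

```python
import math
from statistics import mean, stdev


def type_a(samples):
    """Type A evaluation: best estimate (mean) and its standard uncertainty s / sqrt(n)."""
    return mean(samples), stdev(samples) / math.sqrt(len(samples))


def combined_uncertainty(f, x, u, rel_step=1e-6):
    """Law of propagation of uncertainty for uncorrelated inputs:
    u_c(y)^2 = sum_i (df/dx_i)^2 * u(x_i)^2, sensitivities by central differences."""
    total = 0.0
    for i in range(len(x)):
        h = rel_step * max(abs(x[i]), 1.0)
        up, down = list(x), list(x)
        up[i] += h
        down[i] -= h
        c_i = (f(up) - f(down)) / (2 * h)     # sensitivity coefficient df/dx_i
        total += (c_i * u[i]) ** 2
    return math.sqrt(total)


# Hypothetical velocity-area model Q = v * A for a discharge measurement.
v, u_v = type_a([1.02, 0.98, 1.01, 0.99, 1.00])   # repeated velocity readings, m/s
A, u_A = 12.0, 0.3                                # area and its (Type B) uncertainty, m^2
Q = v * A
u_Q = combined_uncertainty(lambda p: p[0] * p[1], [v, A], [u_v, u_A])
print(f"Q = {Q:.2f} m3/s, u_c(Q) = {u_Q:.3f} m3/s")   # Q = 12.00 m3/s, u_c(Q) = 0.312 m3/s
```

In this example the area term dominates the combined uncertainty. A pre-test analysis of the kind described below would therefore point to improving the cross-section survey rather than repeating the velocity readings.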

The implementation of the Guide assumes that the result of a measurement has been corrected for all recognized significant systematic effects and that every effort has been made to identify such effects. In some cases, the uncertainty of a correction for a systematic effect need not be included in the evaluation of the uncertainty of a measurement result. Although the uncertainty has been evaluated, it may be ignored if its contribution to the combined standard uncertainty of the measurement result is insignificant. To decide whether a measurement system is functioning properly, the experimentally observed variability of its output values is often compared with the predicted standard deviation. The observed variability is measured by the observed standard deviation (the end-to-end approach in the AIAA, 1995 terminology). The predicted standard deviation is obtained by combining the various uncertainty components that characterize the measurement. In such cases, only those components (whether obtained from Type A or Type B evaluations) that could contribute to the experimentally observed variability of these output values should be considered.

It is recommended that a preliminary uncertainty analysis be performed before measurements are taken. This allows corrective action to be taken before measurements are acquired, in order to reduce uncertainties. The pre-test uncertainty analysis is based on data and information that exist before the test, such as calibration histories, previous tests with similar instrumentation, prior measurement uncertainty analyses, expert opinions, and, if necessary, special tests. Pre-test analysis has three purposes: to determine whether the measurement result can be obtained with sufficient accuracy, to compare alternative instrumentation and experimental procedures, and to determine corrective actions. Corrective actions resulting from pre-test analysis may include:

- improvements to instrument calibrations if systematic uncertainties are unacceptable

- selection of a different measurement method to obtain the parameter of interest

- repeated testing and/or increased sample sizes if uncertainties are unacceptable

Cost and time may dictate the choice of the corrective actions. If corrective actions cannot be taken, there may be a high risk that test objectives will not be met because of the large uncertainty interval, and cancellation of the test should be a consideration. Post-test analysis validates the pre-test analysis, provides data for validity checks, and provides a statistical basis for comparing test results.

2.3 ICT support and technologies for data management

A large variety of heterogeneous physical devices are used to collect water related data. Their connection with other components of the hydroinformatics platforms uses several technologies, the most recent one being the Internet of Things. This approach ensures the integration of data sources in the Internet by associating a unique identifier with each device and a semantic communication with other Internet components. IoT is already used in water related applications (see The role of IoT in sensing and communication).

Usually, data are received in real time from the source and are most valuable at the time of arrival. This is why analytics that process large-scale stream data in real time are used in many water-related applications (see Streaming data analytics).

Pre-processing is essential in the data pipeline because it takes uncertain, missing or error-prone data as input and transforms them into reliable information. It can be used to filter out useless data or simply to reduce the overall amount of data that needs to be analysed. The most important aspect of data cleaning is the cleaning of time series. These data are usually produced by sensors that monitor different parameters of water flow or water consumption. Regression models exploit continuity and correlations between different sampling processes. The dependency of one variable (e.g. the sensor values) on another (e.g. time) is computed in order to build a regression curve, which is then used as the reference (see Data cleaning).

Today the Internet represents a vast space to which great amounts of information are added every day. Large datasets are indisputably being amassed as a result of our social, mobile, and digital world. We are not far from the time when terms like PetaByte, ExaByte, and ZettaByte will be quite common. However, in the Big Data era, storing huge amounts of data is no longer the biggest challenge. Today researchers struggle to design solutions for understanding the large amount of data available. Efficient parallel/concurrent algorithms and implementation techniques are the key to meeting the scalability and performance requirements entailed by scientific data analyses. Challenges such as scalability and resilience to failure are already being addressed at the infrastructure layer. Applications need fast movement of and operations on the data, not to mention support for coping with an incredible diversity of data. Big Data issues also emerge from extensive data sharing, which allows multiple users to explore or analyse the same data set. All these demand a new movement and a new set of complementary technologies. Big Data is the new way to process and analyse existing data and new data sources (see Processing, storing, and sharing large amount of data).

Until recently, Google, Amazon and Microsoft were the main actors capable of handling big data, but today new actors are entering the Big Data stage. At the forefront, Google uses one of the largest datasets to discover interesting aspects that help improve its services. MapReduce, its novel computing model, prompted scientists to rethink how large-scale data operations should be handled (see MapReduce programming model).

Later on, the MapReduce community began building high-level languages on top of the existing interface in order to move such functionality into the runtime (see Other solutions related to MapReduce).

Besides processing, sharing the data is another challenge for Big Data applications. Even with all the Big Data tools and instruments available, we are still far from understanding all the complexities behind processing large amounts of data (see Sharing the data).

The role of IoT in sensing and communication

The Internet of Things (IoT) is a network connecting diverse physical devices, from sensors to actuators, vehicles and even buildings. It proposes new methods to connect and access information using device-to-device communication protocols, developing paradigms such as smart objects and the addressing of devices over Web services. More recently, IoT was introduced as an infrastructure for collecting data from the most diverse kinds of devices in water monitoring applications. The information is then analysed on specialized IoT platforms such as InfluxData. Several examples of IoT applications in water management stand out:

- Smart irrigation with IoT: Smart irrigation replaces existing irrigation controllers (which are just simple timers) with cloud-enabled smart irrigation controllers that apply water based on plant need (i.e., type of crop) and weather. Moreover, with flow sensors and real-time alerts, property managers and landscape contractors can be alerted as soon as something goes awry. This can happen quite frequently on sites with significant landscaping. An example of such a system is HydroPoint’s WeatherTRAK® smart irrigation system.

- Smart water meters with IoT: A smart water meter can collect usage data and communicate them wirelessly to the water utility company, where analytics software reports the results on a website. Examples of such systems: one of the largest pilot programs of smart meters and related water management software platforms (a smart water management network) is in San Francisco. Water consumption there is measured hourly, and the data are transmitted wirelessly to the utility four times a day. Both the utility and customers can track use. A pilot program in the East Bay Municipal Water District, which targets mostly single-family homes, provides a daily update of hour-by-hour consumption via a website. Consumers can be alerted, for example by email or phone call, when water use exceeds a specified limit or when a meter indicates continuously running water for 24 hours. A customer can also view the data as they come in and compare their consumption with past use and city averages. The usage data should eventually also support leak alerts (by comparing the readings of consecutive water meters).

- Determining water demand in a city: One of the crucial challenges of water management and conservation in a city is determining the amount of water the city is going to use during the next day. This can be estimated accurately with predictive analytics. Recently, IoT has been employed for this purpose, with dedicated platforms keeping track of the history of water consumption in the city on any given day. Predictive analytics processes the historical data collected and combines them with information about special events, holidays and the weather in the city. On this basis, the amount of water the entire population is going to consume in one day can be determined. IoT technology also helps in scheduling the maintenance and shutdown of pumps on a regular basis, and optimization techniques can inform residents in advance about periods when water will be unavailable. This helps water regulation authorities not only to meet the water demand of a city but also to conserve resources and energy.
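The two alert rules mentioned for the East Bay pilot can be sketched in Python as follows: daily use above a specified limit, and water running continuously for 24 hours. The function name and the hourly-reading input format are our own assumptions for illustration. The sketch also assumes that the series starts at midnight. It does not describe the utilities’ actual software.

```python
def meter_alerts(hourly_litres, daily_limit, continuous_hours=24):
    """Return alert messages for one meter, given chronological hourly consumption (litres).
    Assumes the series starts at midnight, so each block of 24 readings is one day."""
    alerts = []
    # Rule 1: daily consumption above the customer's limit.
    for day, start in enumerate(range(0, len(hourly_litres), 24)):
        used = sum(hourly_litres[start:start + 24])
        if used > daily_limit:
            alerts.append(f"day {day}: {used:.0f} L exceeds limit of {daily_limit} L")
    # Rule 2: flow never drops to zero for `continuous_hours` consecutive hours.
    run = 0
    for hour, litres in enumerate(hourly_litres):
        run = run + 1 if litres > 0 else 0
        if run == continuous_hours:
            alerts.append(f"hour {hour}: continuous flow for {continuous_hours} h (possible leak)")
    return alerts


day0 = [0] * 6 + [20] * 12 + [0] * 6   # normal day, 240 L in total
day1 = [5] * 24                        # a dripping fixture never stops
for message in meter_alerts(day0 + day1, daily_limit=200):
    print(message)
```

Here the first day triggers the consumption rule, and the second day triggers only the continuous-flow rule, although its total (120 L) stays under the limit.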

Streaming data analytics

Processing and reasoning must be done in a real-time fashion. Efficient methods for streaming need to be considered as well as robust algorithms that must analyse the data in one pass since it is usually not possible to store the entire history of data. For example, applications that trigger alerts are time-sensitive and the time of response may be significantly influenced by the large number of monitored devices.
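One common way to meet the one-pass requirement is to keep running statistics instead of the raw history. The Python sketch below uses Welford’s online algorithm for the mean and variance. It flags a reading that deviates by more than k standard deviations from what has been seen so far. The class name, k = 3, the warm-up length and the pressure readings are illustrative assumptions.

```python
import math


class RunningStats:
    """One-pass mean and sample variance (Welford's algorithm) with O(1) memory."""

    def __init__(self):
        self.n = 0
        self.mean = 0.0
        self._m2 = 0.0   # running sum of squared deviations from the mean

    def update(self, x):
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self._m2 += delta * (x - self.mean)

    @property
    def std(self):
        return math.sqrt(self._m2 / (self.n - 1)) if self.n > 1 else 0.0

    def is_outlier(self, x, k=3.0, warmup=5):
        """Test x against the statistics seen so far, then absorb it (single pass)."""
        flagged = self.n >= warmup and abs(x - self.mean) > k * self.std
        self.update(x)
        return flagged


stats = RunningStats()
readings = [2.1, 2.0, 2.2, 2.1, 1.9, 2.0, 5.5]   # e.g. pipe pressure in bar
flags = [stats.is_outlier(x) for x in readings]
print(flags)   # only the last reading is flagged
```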

When considering Big Data analytics most of the data is received in real-time and is most valuable at the time of arrival. This is the main motivation behind the increased interest in the field of large-scale stream processing. Some of the earliest academic systems to handle streaming-data analytics were Aurora, STREAM, Borealis and Telegraph. They first introduced the concepts of sliding windows and incremental operators.
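A short Python sketch illustrates the sliding-window and incremental-operator ideas. A time-based window keeps only the tuples of the last `width` seconds. The aggregate (here a mean) is maintained incrementally: each arriving value is added, and the values that slide out are subtracted, so the whole window is never recomputed. The class name is hypothetical, and timestamps are assumed to arrive in non-decreasing order.

```python
from collections import deque


class SlidingWindowMean:
    """Incremental mean over a time-based sliding window covering (ts - width, ts]."""

    def __init__(self, width):
        self.width = width
        self.items = deque()   # (timestamp, value) pairs, oldest first
        self.total = 0.0

    def push(self, ts, value):
        self.items.append((ts, value))
        self.total += value                      # add the arriving tuple
        while self.items[0][0] <= ts - self.width:
            _, old = self.items.popleft()
            self.total -= old                    # subtract tuples that slid out
        return self.total / len(self.items)


window = SlidingWindowMean(width=60)             # 60-second window
results = [window.push(ts, v) for ts, v in [(0, 1.0), (30, 3.0), (60, 5.0), (90, 7.0)]]
print(results)   # [1.0, 2.0, 4.0, 6.0]
```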

One of the major innovations of this field is the ability to run continuous queries that produce different results as new data arrive. Fault tolerance remains one of the major research challenges.

There have been a number of recent systems that enable streaming processing with the use of high-level APIs.

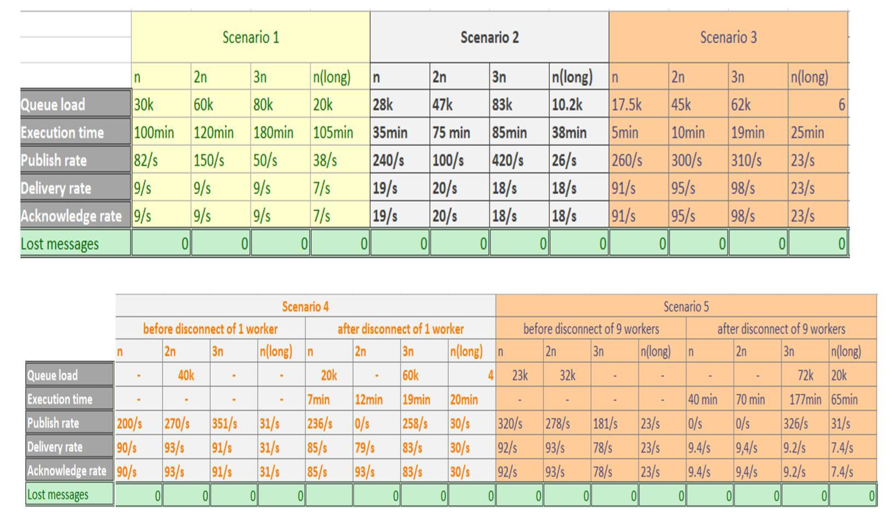

Table 1. Comparison of big data messaging systems.

| System | Characteristics | Drawbacks |
|---|---|---|
| TimeStream [Qian, 2013] | | |
| MillWheel [Akidau, 2013] | | |
| MapReduce Online [Condie, 2010] | | |
| Meteor Shower [Wang, 2012] | | |
| iMR [Lologhetis, 2011] | | |
| Percolator [Peng, 2010] | | |

Spark, a more recent framework, has gained a lot of attention due to its different approach and increased performance. Its authors claim in [Zaharia, 2014] that Spark is 20 times faster than Hadoop for iterative applications and can be used to interactively scan a 1 TB dataset with a latency of about 5-7 seconds.

The key concept in Spark is the resilient distributed dataset (RDD) [Zaharia, 2012]. RDDs are a restricted form of shared memory. They are based on coarse-grained operations and transformations (e.g. map, filter, join) applied to the shared state, as opposed to other systems, which process fine-grained updates.

Because the same operation is applied to many data items, it is possible to log the transformations and compute the lineage of each RDD instead of logging the actual data. An RDD has enough lineage information to compute its partitions from stable storage. RDDs can express cluster programming models such as MapReduce, DryadLINQ, HaLoop, Pregel or SQL. They allow more efficient fault tolerance than previous systems, but they are restricted to applications that perform bulk reads and writes.
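A toy Python model can illustrate lineage-based recovery. It is not Spark’s API: the class `ToyRDD` and its methods are hypothetical names chosen for this sketch. Each dataset records only its parent and the coarse-grained transformation applied to it. A lost partition is therefore rebuilt by replaying those transformations on the corresponding partition in stable storage, while the other partitions are left untouched.

```python
class ToyRDD:
    """Toy dataset that stores its lineage (parent + transformation) instead of replicated data."""

    def __init__(self, parent=None, fn=None, op="storage", source=None):
        self.parent, self.fn, self.op, self.source = parent, fn, op, source
        self.cache = {}   # materialized partitions, keyed by partition index

    @classmethod
    def from_storage(cls, partitions):
        return cls(source=partitions)

    def map(self, f):
        return ToyRDD(self, lambda part: [f(x) for x in part], "map")

    def filter(self, pred):
        return ToyRDD(self, lambda part: [x for x in part if pred(x)], "filter")

    def compute(self, i):
        """Materialize partition i, recomputing missing ancestors from the lineage."""
        if i not in self.cache:
            if self.source is not None:
                self.cache[i] = list(self.source[i])          # read from stable storage
            else:
                self.cache[i] = self.fn(self.parent.compute(i))
        return self.cache[i]

    def lose(self, i):
        """Simulate a failure that loses partition i of this dataset and of its ancestors."""
        node = self
        while node is not None:
            node.cache.pop(i, None)
            node = node.parent

    def lineage(self):
        ops, node = [], self
        while node is not None:
            ops.append(node.op)
            node = node.parent
        return " -> ".join(reversed(ops))


readings = ToyRDD.from_storage([[1, 2, 3], [4, 5, 6]])
high = readings.map(lambda x: x * 2).filter(lambda x: x > 4)
before = [high.compute(0), high.compute(1)]
high.lose(1)                          # partition 1 is lost at every level
print(high.lineage())                 # storage -> map -> filter
print(high.compute(1) == before[1])   # True: rebuilt from lineage
```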

D-Stream [Zaharia, 2013] (or Spark Streaming) is an add-on to the Spark engine. It is based on the idea of treating streaming computations as a series of short-interval batch computations. Because it is based on RDDs, the process is also deterministic, so lost data can be recomputed without replication and in parallel with the active computations. Consistency is ensured by atomically processing each record within the time interval in which it arrives. Spark Streaming inter-operates efficiently with Spark’s batch features: users can express ad-hoc queries and use the same high-level API for processing both historical and streaming data.
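The discretized-stream idea can be sketched in a few lines of Python, independently of Spark’s actual API. Records are grouped into short intervals by arrival time, and each interval is processed as a small deterministic batch. The running state after each interval is a new value derived only from the previous state and the batch, so any lost interval can be recomputed by replaying it. The function names, the interval length and the sensor identifiers are illustrative assumptions.

```python
from collections import Counter, defaultdict


def discretize(records, interval):
    """Group (arrival_time, key) records into consecutive batches of `interval` seconds."""
    batches = defaultdict(list)
    for ts, key in records:
        batches[int(ts // interval)].append(key)
    return [batches[i] for i in sorted(batches)]   # empty intervals are simply skipped


def running_counts(records, interval):
    """Stateful stream computation as a series of batch jobs: state_t = state_(t-1) + batch_t."""
    state = Counter()
    history = []
    for batch in discretize(records, interval):
        state = state + Counter(batch)   # a new state object per interval, never mutated in place
        history.append(dict(state))
    return history


records = [(0.2, "s1"), (0.7, "s2"), (1.1, "s1"), (1.9, "s1"), (2.5, "s2")]
for step in running_counts(records, interval=1.0):
    print(step)
```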

One issue with the system is that it does not scale linearly. With many nodes, the number of stragglers also increases, which in turn affects overall performance. Also, Spark Streaming supports only the point-to-point pattern, whereas other systems can also operate using broadcast or all-to-one aggregations. The authors also highlight other modules and possible improvements, such as enabling asynchrony, version tracking or correctness debugging.

Data cleaning

One of the most common probabilistic models is the Kalman filter, a stochastic and recursive data filtering algorithm that models the value of a sensor as a function of its previous value. Naive Bayes and Markov chains are also proposed in [Elnahrawy, 2003] [Chu, 2005] to identify anomalies. Inferring missing values can also improve the quality of sensor stream processing, since these values can serve as a basis for assessing the precision of raw sensor values.
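A scalar version of the Kalman filter is enough to show the recursion. The sketch assumes that the monitored quantity follows a random walk, x_k = x_{k-1} + w_k (process-noise variance q). Each reading is assumed to be z_k = x_k + v_k (measurement-noise variance r). Every step predicts from the previous estimate and then corrects it by a gain-weighted share of the new reading. The noise variances and the water-level values are illustrative assumptions, not calibrated parameters.

```python
def kalman_1d(measurements, q, r, x0, p0):
    """Scalar Kalman filter for a random-walk state observed with additive noise."""
    x, p = x0, p0            # current estimate and its variance
    estimates = []
    for z in measurements:
        p = p + q            # predict: x stays the same, its uncertainty grows by q
        k = p / (p + r)      # Kalman gain: how much to trust the new reading
        x = x + k * (z - x)  # update with the innovation (reading minus prediction)
        p = (1 - k) * p
        estimates.append(x)
    return estimates


# Hypothetical water-level readings (m) alternating +/-0.5 m around a true level of 5 m.
noisy = [5.0 + (0.5 if i % 2 else -0.5) for i in range(100)]
smoothed = kalman_1d(noisy, q=1e-4, r=0.25, x0=5.0, p0=0.01)
print(max(abs(e - 5.0) for e in smoothed[10:]))   # far below the 0.5 m noise amplitude
```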

Outliers can be detected using statistical approaches (parametric, non-parametric), nearest neighbour or clustering. A detailed taxonomy of outlier detection methods is presented in [Zhang, 2010].
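As an example of a non-parametric statistical approach, the sketch below flags readings using the median absolute deviation (MAD). It computes the modified z-score 0.6745·|x − median| / MAD and applies a commonly used cut-off of 3.5. The function name and the sample data are hypothetical. The method is robust because a single extreme value barely moves either the median or the MAD.

```python
from statistics import median


def mad_outliers(values, threshold=3.5):
    """Indices of values whose modified z-score (based on the median and MAD) exceeds threshold."""
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        return []   # more than half the values are identical; the score is undefined
    return [i for i, v in enumerate(values) if 0.6745 * abs(v - med) / mad > threshold]


ph_readings = [7.1, 7.0, 7.2, 6.9, 7.1, 12.4, 7.0]   # hypothetical pH samples
print(mad_outliers(ph_readings))                       # [5]
```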

Declarative cleaning refers to the capability of using SQL-like interfaces with complex background cleaning algorithms. One such interface is proposed in [Mayfield, 2010]. Their aim is to hide the complexity of the cleaning process and expose a friendlier interface to non-experts.

Much of the work on compression and filtering is intended for indexing purposes and less for discovering patterns. Such examples include Fourier transformations [Agrawal, 1993] and approximating functions [Chakrabarti, 2002].

For trend discovery, wavelet coefficients are used in [Papadimitriou, 2004]. Other work includes finding representative trends [Indyk, 2000] (the sub-sequence of the time series with the smallest sum of distances from all other sub-sequences of equal length) and motifs [Chiu, 2003] (frequently repeated sub-sequences). Further techniques are vector quantization, change detection [Ide, 2005] and compressed sensing (a signal processing technique for efficiently acquiring and reconstructing a signal).

Dimensionality reduction can be split into feature selection (finding a subset of the variables) and feature extraction (transforming data from a high-dimensional space into a space of fewer dimensions). The best-known technique for dimensionality reduction is Principal Component Analysis (PCA). PCA converts a set of observations of possibly correlated variables into a set of linearly uncorrelated variables, the principal components, sorted by variance. The first component has the largest variance.
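A compact PCA sketch in Python with NumPy follows, one standard way of computing it via the eigendecomposition of the covariance matrix. Each variable is centred, and the eigenvectors of the covariance matrix are sorted by decreasing eigenvalue (variance). The observations are then projected onto the leading components. The function name and the level/discharge data are hypothetical. In practice `n_components` would be chosen smaller than the number of variables in order to reduce dimensionality.

```python
import numpy as np


def pca(X, n_components):
    """Return principal-component scores of X (rows = observations) and the sorted variances."""
    X = np.asarray(X, dtype=float)
    centered = X - X.mean(axis=0)                    # remove the mean of each variable
    cov = np.cov(centered, rowvar=False)             # covariance matrix of the variables
    eigvals, eigvecs = np.linalg.eigh(cov)           # symmetric matrix: ascending eigenvalues
    order = np.argsort(eigvals)[::-1]                # sort components by decreasing variance
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    scores = centered @ eigvecs[:, :n_components]    # project onto the leading components
    return scores, eigvals


# Hypothetical, strongly correlated observations: water level (m) and discharge (m3/s).
X = [[1.0, 2.1], [2.0, 3.9], [3.0, 6.2], [4.0, 7.8], [5.0, 10.1], [6.0, 12.0]]
scores, variances = pca(X, n_components=2)
print(variances / variances.sum())   # share of variance per component; the first dominates
```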

Processing, storing, and sharing large amount of data

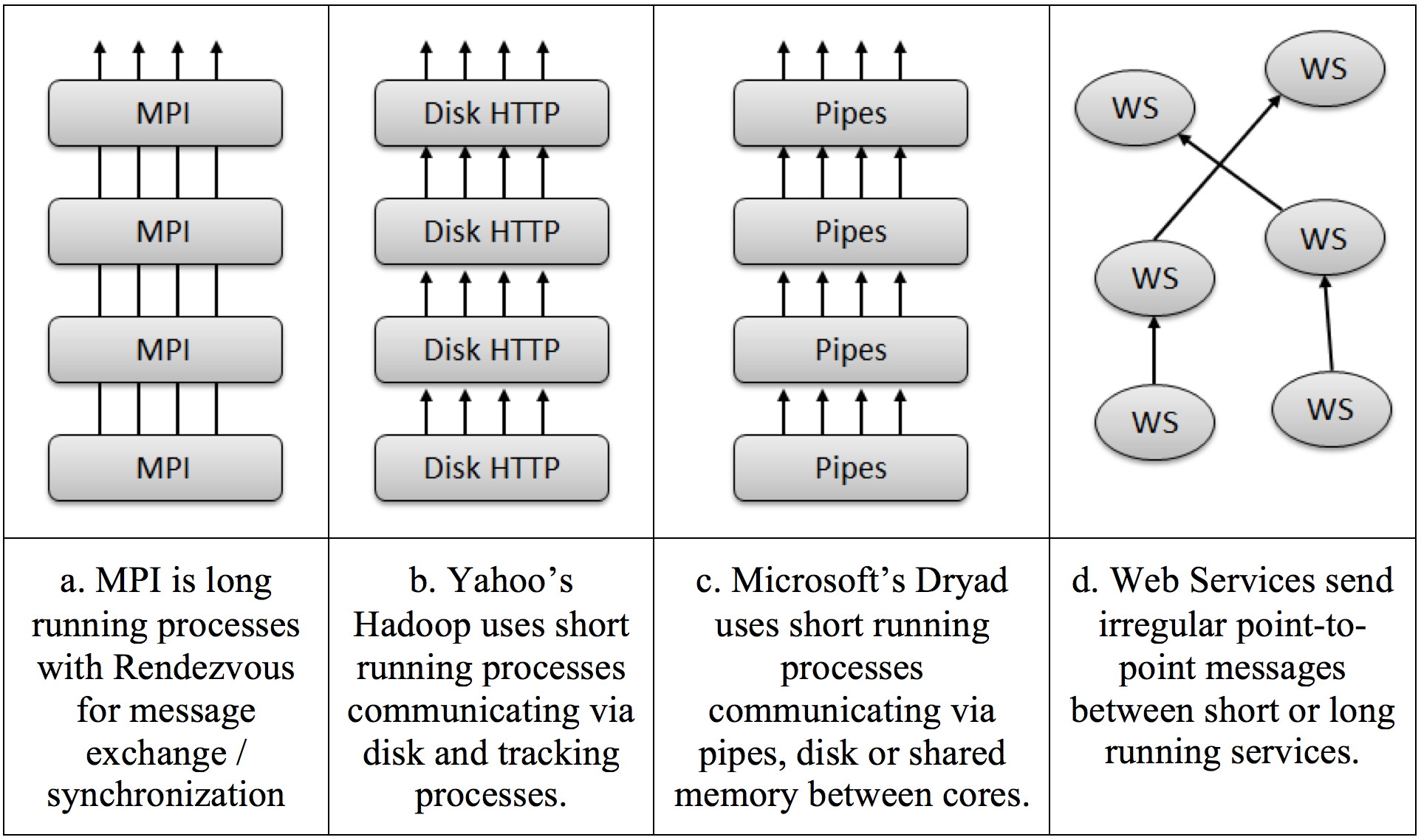

Runtime Environments for Big Data

High-level languages (e.g., for parallel programming) have long been a holy grail of computer science research, but lately researchers have made a lot of progress in the area of runtime environments. There is much similarity between parallel and distributed runtimes, as both support messaging with different properties (several such choices are presented in Figure 1, for different hardware and software models). Hardware support for parallelism/concurrency ranges from shared-memory multicore machines, through closely coupled clusters, to higher-latency (possibly lower-bandwidth) distributed systems. The coordination (communication/synchronization) of the different execution units ranges from threads (with shared memory on cores) and MPI (between cores or nodes of a cluster), through workflows or mash-ups linking services together, to the new generation of data-intensive programming systems typified by Hadoop (implementing MapReduce) or Dryad.

Figure 1. Combinations of processes/threads and intercommunication mechanisms [Fox, 2010].

Short-running threads can be spawned in the context of persistent data in memory and have modest overhead [Fox, 2010]. Short-running processes (i.e., implemented as stateless services) are seen in Dryad and Hadoop. Various runtime platforms also implement different patterns of operation. In iteration-based platforms, the results of one stage are iterated many times; this is typical of most MPI-style algorithms. In pipelining-based platforms, the results of one stage (e.g., Map or Reduce operations) are forwarded to another; this is the functional parallelism typical of workflow applications.

An important ambiguity in parallel/distributed programming models and runtimes comes from the fact that today both MPI-style parallelism and the distributed Hadoop/Dryad/Web Service/Workflow models are implemented by messaging. This is motivated by the fact that messaging avoids the errors seen in shared-memory thread synchronization.

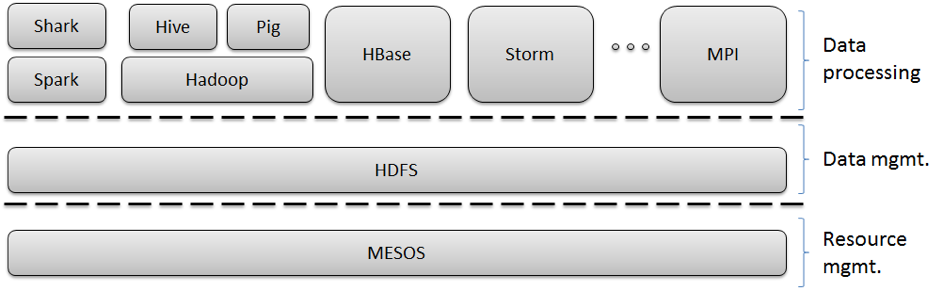

MPI is a perfect example of a runtime that crosses different application characteristics. MPI gives excellent performance and ease of programming for MapReduce, as it has elegant support for general reductions. However, it does not have the fault tolerance and flexibility of Hadoop or Dryad. Furthermore, MPI is designed for local computing: if the data are stored in a compute node’s memory, that node’s CPU is responsible for processing them. Hadoop and Dryad combine this idea with the notion of taking the computation to the data. A (non-comprehensive) overview of the technologies used today for Big Data processing is presented in Figure 2.

Figure 2. Example of an ecosystem of Big Data analysis tools and frameworks.

MapReduce programming model

MapReduce (MR), the model popularized by Google [Dean, 2008], is attractive for ad-hoc parallel processing of arbitrary data. It is today seen as an important programming model for large-scale data-parallel applications such as web indexing, data mining and scientific simulations, because it lets users express relatively sophisticated distributed programs through a simple model.

MapReduce breaks a computation into small tasks that run in parallel on multiple machines, and scales easily to very large clusters of inexpensive commodity computers. An MR program consists of only two functions, called Map and Reduce, written by the user to process key/value pairs. The input data set is stored as a collection of partitions in a distributed file system deployed on each node of the cluster. The program is then submitted to a distributed processing framework. The framework runs the Map function on the input partitions in parallel, groups the intermediate key/value pairs by key, and passes each group to the Reduce function.
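The division of labour between user code and framework can be simulated sequentially in Python. The user supplies only `map_fn` and `reduce_fn`, which here sum hypothetical meter readings per district. The function `run_mapreduce` stands in for the framework: it performs the map, shuffle (group-by-key) and reduce phases that a real MapReduce runtime distributes across machines. The distribution and fault tolerance of a real runtime are omitted from this sketch.

```python
from collections import defaultdict


def map_fn(record):
    """Map: parse one 'meter_id,district,litres' line and emit (district, litres)."""
    _meter_id, district, litres = record.split(",")
    yield district, float(litres)


def reduce_fn(key, values):
    """Reduce: total consumption for one district."""
    return key, sum(values)


def run_mapreduce(partitions, map_fn, reduce_fn):
    """Sequential simulation of the phases a MapReduce framework runs in parallel."""
    # Map phase: in a real cluster each partition is processed by a different worker.
    intermediate = [pair for part in partitions for record in part for pair in map_fn(record)]
    # Shuffle phase: group all intermediate values by key.
    groups = defaultdict(list)
    for key, value in intermediate:
        groups[key].append(value)
    # Reduce phase: one call per distinct key.
    return dict(reduce_fn(key, values) for key, values in sorted(groups.items()))


partitions = [["m1,north,120", "m2,south,80"], ["m3,north,45.5", "m4,south,20"]]
totals = run_mapreduce(partitions, map_fn, reduce_fn)
print(totals)   # {'north': 165.5, 'south': 100.0}
```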

A key benefit of MapReduce is that it automatically handles failures, hiding the complexity of fault tolerance from the programmer. If a node crashes, MapReduce automatically reruns its tasks on a different machine. Similarly, a node may be available but performing poorly, a condition called a straggler. In that case, MapReduce runs a speculative copy of its task (also called a “backup task”) on another machine to finish the computation faster. This mechanism is known as “speculative execution”, not to be confused with speculative execution at the OS or hardware level for branch prediction. Without it, a job would be as slow as its misbehaving task. In fact, Google has noted that in their implementation speculative execution can improve job response times by 44% [Dean, 2008].

Google's MapReduce implementation is coupled with a distributed file system, the Google File System (GFS) [Ghemawat, 2012], from which it reads the input data for MapReduce computations and to which it finally writes the results. According to Dean et al. [Dean, 2008], in their MapReduce implementation the intermediate data are first written to local files and then accessed by the reduce tasks.

The popular open-source implementation of MapReduce, Hadoop [Zaharia, 2008], was developed primarily by Yahoo, where it runs jobs that produce hundreds of terabytes of data. Today Hadoop is also used at Facebook, Amazon and elsewhere. Researchers are using Hadoop for short tasks where low response time is critical: seismic simulations, natural language processing, mining web data, and many others. Hadoop includes several specific components, such as its own file system, HDFS. In HDFS, data are spread across the cluster, with multiple copies kept in case of hardware failures. Hadoop deploys the code to the machines that hold the data it is meant to operate on. HDFS itself stores files as blocks. MapReduce applications read them as key/value pairs, in which each record has a key and an associated value. Relationships between keys can be defined only within the MapReduce application.

Other solutions related to MapReduce

Later on, mostly to avoid re-implementing repetitive tasks, the MapReduce community began building high-level languages on top of the existing interface to move such functionality into the runtime. Pig [Olston, 2008] and Hive [Thusoo, 2010] are two notable projects in this direction. These domain-specific languages are developed on top of the MapReduce model to hide some of the complexity from the programmer. Today they offer a limited hybridization of declarative and imperative programs and generalize SQL’s stored-procedure model. Some whole-query optimizations are automatically applied by these systems across MapReduce computation boundaries. However, these approaches adopt simple custom type systems and provide limited support for iterative computations.

An alternative tool on top of Hadoop is being developed by Facebook. Hive lets analysts crunch data atop Hadoop using something very similar to the structured query language (SQL), which has been widely used since the 1980s. It is based on concepts such as tables, columns and partitions, providing a high-level query tool for accessing data from existing Hadoop warehouses [Thusoo, 2010]. The result is a data warehouse layer built on top of Hadoop. It allows structured data to be queried and managed using a familiar SQL-like query language, HiveQL, into which optional custom MapReduce scripts may be plugged. Hive converts HiveQL transformations into a series of MapReduce jobs and HDFS operations and applies several optimizations during the compilation process.

The Hive data model is organized into tables, partitions and buckets. The tables are similar to RDBMS tables and each corresponds to an HDFS directory. Each table can be divided into partitions that correspond to sub-directories within an HDFS table directory and each partition can be further divided into buckets, which are stored as files within the HDFS directories.
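The table/partition/bucket hierarchy can be pictured as a path computation, shown here as an illustrative Python sketch. Two parts follow Hive’s conventions: the warehouse root `/user/hive/warehouse` is Hive’s usual default location, and partition sub-directories are named `column=value`. Two parts are our own assumptions: the CRC32 hash and the `bucket_NNNNN` file name are stand-ins, not Hive’s own bucketing hash or file naming. The table and column names are hypothetical.

```python
import zlib

WAREHOUSE = "/user/hive/warehouse"   # assumed warehouse root (Hive's usual default)


def hive_location(table, partition, bucket_key, num_buckets):
    """Illustrative HDFS location of a row: table dir / partition sub-dirs / bucket file.
    CRC32 and the 'bucket_NNNNN' file name are stand-ins, not Hive's own hash or naming."""
    table_dir = f"{WAREHOUSE}/{table}"
    partition_dirs = "/".join(f"{column}={value}" for column, value in partition.items())
    bucket = zlib.crc32(str(bucket_key).encode("utf-8")) % num_buckets
    return f"{table_dir}/{partition_dirs}/bucket_{bucket:05d}"


path = hive_location("meter_readings", {"ds": "2018-05-01", "region": "north"},
                     bucket_key="meter-42", num_buckets=8)
print(path)
```

The same bucket key always maps to the same file within a partition. This property is what lets bucketed tables support sampling and efficient joins on the bucketing column.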

It is important to note that Hive was designed for scalability, extensibility and batch job handling, not for low-latency performance or real-time queries. Hive query response times for even the smallest jobs can be of the order of several minutes, and for larger jobs they may be of the order of several hours. Today, Hive is Facebook’s primary tool for analysing, among other things, the performance of online ads.

Pig, on the other hand, is a high-level data-flow language (Pig Latin) and execution framework whose compiler produces sequences of MapReduce programs for execution within Hadoop [Olston, 2008]. Pig is designed for batch processing of data. It offers SQL-style high-level data manipulation constructs, which can be assembled in an explicit dataflow and interleaved with custom Map- and Reduce-style functions or executables.

Pig’s infrastructure layer consists of a compiler that turns (relatively short) Pig Latin programs into sequences of MapReduce programs. Pig is a Java client-side application that users install locally; nothing is altered on the Hadoop cluster itself. Grunt is the Pig interactive shell. Among the important advantages of Pig we mention optimized data reading performance, support for semi-structured data, and modular design. However, several limitations should not be ignored. These include the large amount of boilerplate Java code (although proportionally less than in Hadoop), the effort needed to learn Pig, and the lack of debugging techniques.

Spark is a framework that supports applications which reuse a working set of data across multiple parallel operations, such as iterative machine learning algorithms and interactive data analysis. It does so while retaining the scalability and fault tolerance of MapReduce [Zaharia, 2010]. Spark provides two main abstractions for parallel programming: resilient distributed datasets and parallel operations on these datasets (invoked by passing a function to apply to a dataset).

Resilient distributed datasets (RDDs) are read-only collections of objects partitioned across a set of machines that can be rebuilt if a partition is lost. Users can explicitly cache an RDD in memory across machines and reuse it in multiple MapReduce-like parallel operations [Zaharia, 2012]. RDDs achieve fault tolerance through a notion of lineage: if a partition of an RDD is lost, the RDD has enough information about how it was derived from other RDDs to be able to rebuild just that partition.

Spark is implemented in Scala, a statically typed high-level programming language for the Java VM, and exposes a functional programming interface similar to DryadLINQ. Spark can also be used interactively, allowing the user to define RDDs, functions, variables and classes and use them in parallel operations on a cluster. According to experiments [Zaharia, 2010], Spark makes extensive use of the memory of cluster nodes through the RDD abstraction. This allows it to outperform Hadoop by a factor of ten in iterative machine learning jobs and to interactively query a large dataset with sub-second response time.

Twister is another MapReduce extension, designed to support iterative MapReduce computations efficiently [Ekanayake]. Twister uses a publish/subscribe messaging infrastructure for communication and data transfers. It supports long-running map/reduce tasks that keep static data in memory between jobs, following a “configure once, and run many times” approach. In addition, it provides programming extensions to MapReduce with “broadcast” and “scatter” type data transfers. Such improvements allow Twister to support iterative MapReduce computations highly efficiently compared to other MapReduce runtimes.
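The “configure once, run many times” pattern can be illustrated with an iterative k-means written in plain Python. This is not Twister’s API: `CachedMapTask`, `reduce_centroids` and `iterative_kmeans` are hypothetical names. Each long-running map task keeps its static data partition in memory across iterations, and only the small variable data (the current centroids) is broadcast to all map tasks in each iteration.

```python
class CachedMapTask:
    """Long-running map task: its static data partition is loaded once and reused every iteration."""

    def __init__(self, points):
        self.points = points                                 # "configure once"

    def map(self, centroids):
        """Assign each cached point to its nearest broadcast centroid; emit partial sums."""
        partial = {}
        for x in self.points:
            j = min(range(len(centroids)), key=lambda c: abs(x - centroids[c]))
            total, count = partial.get(j, (0.0, 0))
            partial[j] = (total + x, count + 1)
        return partial


def reduce_centroids(partials, old_centroids):
    """Combine partial sums from all map tasks into new centroids."""
    sums = {}
    for partial in partials:
        for j, (total, count) in partial.items():
            s, n = sums.get(j, (0.0, 0))
            sums[j] = (s + total, n + count)
    new = list(old_centroids)                                # empty clusters keep their centroid
    for j, (s, n) in sums.items():
        new[j] = s / n
    return new


def iterative_kmeans(partitions, centroids, max_iter=20, tol=1e-9):
    tasks = [CachedMapTask(p) for p in partitions]           # static data cached in the tasks
    for _ in range(max_iter):
        partials = [task.map(centroids) for task in tasks]   # broadcast the current centroids
        new = reduce_centroids(partials, centroids)
        converged = max(abs(a - b) for a, b in zip(new, centroids)) < tol
        centroids = new
        if converged:
            break
    return centroids


# Hypothetical daily consumption values split across two map tasks.
result = iterative_kmeans([[1.0, 1.2, 0.8, 9.0], [9.5, 8.5, 1.1]], centroids=[0.0, 5.0])
print(result)
```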

Dryad is a general-purpose distributed execution engine for coarse-grain data-parallel applications [Isard, 2007]. While MapReduce was designed to be accessible to the widest possible class of developers (aiming for simplicity at the expense of generality and performance), the Dryad system allows the developer fine control over the communication graph as well as the subroutines that live at its vertices. A Dryad application developer can specify an arbitrary directed acyclic graph to describe the application’s communication patterns, and express the data transport mechanisms (files, TCP pipes, and shared-memory FIFOs) between the computation vertices.

Dryad runs the application by executing the vertices of this graph on a set of available computers, communicating as appropriate through files, TCP pipes, and shared-memory FIFOs. The vertices provided by the application developer are quite simple and are usually written as sequential programs with no thread creation or locking. Concurrency arises from Dryad scheduling vertices to run simultaneously on multiple computers, or on multiple CPU cores within a computer. The application can discover the size and placement of data at run time, and modify the graph as the computation progresses to make efficient use of the available resources.
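The execution model described above can be sketched with Python's standard `graphlib.TopologicalSorter` (Python 3.9+) and a thread pool. Each vertex is a sequential function, and concurrency arises only because the scheduler runs every vertex whose inputs are ready at the same time. The example graph and vertex functions are hypothetical. In-memory dictionaries stand in for Dryad's files, TCP pipes and FIFOs. This is not Dryad's API.

```python
from concurrent.futures import FIRST_COMPLETED, ThreadPoolExecutor, wait
from graphlib import TopologicalSorter
from typing import Callable, Dict, List

# Each vertex is a plain sequential function: list of upstream outputs -> output.
Vertex = Callable[[List[int]], int]


def run_dag(graph: Dict[str, List[str]], vertices: Dict[str, Vertex],
            workers: int = 4) -> Dict[str, int]:
    """Run each vertex as soon as all of its upstream inputs are available."""
    sorter = TopologicalSorter(graph)  # graph maps vertex -> its predecessors
    sorter.prepare()                   # raises CycleError if the graph is not acyclic
    results: Dict[str, int] = {}
    running = {}
    with ThreadPoolExecutor(max_workers=workers) as pool:
        while sorter.is_active():
            # Schedule every vertex whose inputs are ready; these may run concurrently.
            for name in sorter.get_ready():
                inputs = [results[p] for p in graph.get(name, [])]
                running[pool.submit(vertices[name], inputs)] = name
            done, _ = wait(list(running), return_when=FIRST_COMPLETED)
            for fut in done:
                name = running.pop(fut)
                results[name] = fut.result()  # make the output available downstream
                sorter.done(name)
    return results


# Hypothetical job: two input partitions are processed independently, then joined.
graph = {"scale_a": ["read_a"], "scale_b": ["read_b"], "total": ["scale_a", "scale_b"]}
vertices = {
    "read_a": lambda _: 3,
    "read_b": lambda _: 4,
    "scale_a": lambda xs: xs[0] * 10,
    "scale_b": lambda xs: xs[0] * 10,
    "total": lambda xs: sum(xs),
}
print(run_dag(graph, vertices)["total"])  # 70
```

The vertex functions contain no threads or locks. All parallelism comes from `run_dag` dispatching independent vertices (here `read_a`/`read_b`, then `scale_a`/`scale_b`) together.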

Dryad is designed to scale from powerful multi-core single computers, through small clusters of computers, to data centers with thousands of computers. The Dryad execution engine handles all the difficult problems of creating a large distributed, concurrent application: scheduling the use of computers and their CPUs, recovering from communication or computer failures, and transporting data between vertices.

DryadLINQ is a system and a set of language extensions that enable a programming model for large-scale distributed computing [Yu, 2008]. It generalizes execution environments such as SQL, MapReduce, and Dryad in two ways: by adopting an expressive data model of strongly typed .NET objects, and by supporting general-purpose imperative and declarative operations on datasets within a traditional high-level programming language. A DryadLINQ application is a sequential program (hence, the programmer is given the “illusion” of writing for a single computer), composed of LINQ (Language Integrated Query) expressions performing imperative or declarative operations and transformations on datasets, and can be written and debugged using standard .NET development tools. Objects in DryadLINQ datasets can be of any .NET type, making it easy to compute with data such as image patches, vectors, and matrices. DryadLINQ programs can use traditional structuring constructs such as functions, modules, and libraries, and express iteration using standard loops. Crucially, the distributed execution layer employs a fully functional, declarative description of the data-parallel component of the computation, which enables sophisticated rewritings and optimizations like those traditionally employed by parallel databases. The DryadLINQ system automatically and transparently translates the data-parallel portions of the program into a distributed execution plan, which is then passed to the Dryad execution platform; Dryad in turn ensures efficient, reliable execution of this plan.
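The core idea is that a declarative query over a partitioned dataset can be split into a per-partition stage and a merge stage. This can be sketched in Python. The query below is written once and then executed independently on each partition before the partial results are combined. It consists of a filter, a projection and a group-by count over typed records, similar to a LINQ `Where`/`Select`/`GroupBy` chain. The `Reading` record type, the partitioning and the function names are hypothetical. DryadLINQ itself performs this translation automatically for .NET code and runs the resulting plan on Dryad.

```python
from collections import Counter
from dataclasses import dataclass
from functools import reduce
from typing import Iterable, List


@dataclass(frozen=True)
class Reading:  # hypothetical strongly typed record
    station: str
    level_cm: float


def local_stage(partition: Iterable[Reading], threshold: float) -> Counter:
    # Where(level > threshold) -> Select(station) -> GroupBy(station).Count(), per partition.
    return Counter(r.station for r in partition if r.level_cm > threshold)


def merge_stage(partials: List[Counter]) -> Counter:
    # Partial counts combine associatively, so partitions can be processed in parallel.
    return reduce(lambda a, b: a + b, partials, Counter())


partitions = [
    [Reading("A", 120.0), Reading("B", 80.0)],
    [Reading("A", 150.0), Reading("C", 200.0), Reading("B", 95.0)],
]
result = merge_stage([local_stage(p, threshold=100.0) for p in partitions])
print(dict(result))
```

Because the local stage is a pure function of one partition and the merge is associative, a planner is free to run the local stages on different machines. This is the kind of freedom a declarative description gives the execution layer.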

Sharing the data

Besides processing, sharing the data is another challenge for Big Data applications. Even with all the Big Data tools and instruments available, we are still far from understanding all the complexities behind processing large amounts of data. Recent projects such as BigQuery have the potential to encourage scientists to put their data into the Cloud, where others might also access it. BigQuery is a tool developed by Google that allows ordinary users to run ad hoc queries using an SQL-like syntax. Google used the tool internally for years (under the name Dremel) before releasing a form of it as a generally available service, BigQuery, capable of returning results in seconds from terabytes of data [Vrbic, 2012]. The tool is hosted on Google's infrastructure. Its main advantage is simplicity: unlike Hadoop, which requires setup and administration, BigQuery lets companies put their data in Google's cloud and use it directly in their applications.

Similarly, Facebook is building Prism [Roush, 2013], a platform currently rolling out across the Facebook infrastructure. A typical Hadoop cluster is governed by a single “namespace” and a list of computing resources available for each job. In contrast, Prism carves out multiple namespaces, creating many “logical clusters” that operate atop the same physical cluster. These namespaces can then be divided across various Facebook teams, all of which still have access to a common dataset that can span multiple data centers.

Nexus is a low-level substrate that provides isolation and efficient resource sharing across frameworks running on the same cluster, while giving each framework freedom to implement its own programming model and fully control the execution of its jobs [Hindman, 2009]. As new programming models and new frameworks emerge, they will need to share computing resources and data sets. For example, a company using Hadoop should not have to build a second cluster and copy data into it to run a Dryad job. Sharing resources between frameworks is difficult today because frameworks perform both job execution management and resource management. For example, Hadoop acts like a “cluster OS” that allocates resources among users in addition to running jobs. To enable diverse frameworks to coexist, Nexus decouples job execution management from resource management by providing a simple resource management layer over which frameworks like Hadoop and Dryad can run.

Mesos is a thin resource sharing layer that enables fine-grained sharing across diverse cluster computing frameworks by giving frameworks such as Hadoop or Dryad a common interface for accessing cluster resources [Hindman, 2011]. To support a scalable and efficient sharing system for a wide array of processing frameworks, Mesos delegates control over scheduling to the frameworks themselves. This is accomplished through an abstraction called a “resource offer”, which encapsulates a bundle of resources that a framework can allocate on a cluster node to run tasks. Mesos decides how many resources to offer each framework, based on an organizational policy such as fair sharing, while frameworks decide which resources to accept and which tasks to run on them. Although this decentralized scheduling model may not always lead to globally optimal scheduling, its developers found that it performs surprisingly well in practice, allowing frameworks to meet goals such as data locality nearly perfectly [Hindman, 2011]. In addition, resource offers are simple and efficient to implement, allowing Mesos to be highly scalable and robust to failures.
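The two-level scheduling scheme can be sketched as a toy single-process model. The master decides which framework receives an offer. Here a simple fair-sharing rule offers each node's free CPUs first to the framework with the fewest allocated CPUs. Each framework then decides how much of the offer to use and which tasks to launch. The class names, the policy details and the resource figures are assumptions and do not reflect Mesos's actual API.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class Offer:
    node: str
    cpus: int


@dataclass
class Framework:
    name: str
    task_cpus: int       # CPUs needed per task (hypothetical)
    pending_tasks: int
    allocated: int = 0
    launched: List[Tuple[str, int]] = field(default_factory=list)

    def on_offer(self, offer: Offer) -> int:
        """Framework-side decision: launch as many tasks as fit; return CPUs used."""
        n = min(self.pending_tasks, offer.cpus // self.task_cpus)
        self.pending_tasks -= n
        used = n * self.task_cpus
        self.allocated += used
        self.launched.extend([(offer.node, self.task_cpus)] * n)
        return used


def allocate(nodes: Dict[str, int], frameworks: List[Framework]) -> Dict[str, int]:
    """Master-side decision: offer each node's free CPUs under a fair-sharing rule."""
    free = dict(nodes)
    for node in free:
        # Offer to frameworks in order of least allocated CPUs (fair sharing);
        # CPUs a framework leaves unused are offered to the next one.
        for fw in sorted(frameworks, key=lambda f: f.allocated):
            if free[node] == 0:
                break
            free[node] -= fw.on_offer(Offer(node, free[node]))
    return free


hadoop = Framework("hadoop", task_cpus=2, pending_tasks=3)
mpi = Framework("mpi", task_cpus=4, pending_tasks=1)
leftover = allocate({"node1": 4, "node2": 4}, [hadoop, mpi])
print(leftover, hadoop.launched, mpi.launched)
```

The master never inspects the frameworks' tasks. It only decides who sees an offer first, and each framework applies its own placement logic. This is the separation that lets Mesos stay thin.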

3 Water Models

Water is an important resource in many human activities. Thus, water management should include an integrated view of several distinct systems from different domains: environment, agriculture, industry, etc. As a result, there are many complex interactions between different factors, some of them not immediately apparent. In this context there is a pressing need for complex modelling solutions for water-based systems.

A model is a simplified, schematic representation of the real world. Models are meant to help engineers, scientists and decision-makers to determine what is happening in reality and to predict what may happen in the future. In particular, they are useful for assessing the impact of human activities on the environment or on artificial systems.

A classical definition of a model is “a simplification of reality over some time period or spatial extent, intended to promote understanding of the real system” [Bellinger, 2006] or “A model is a simplification of reality that retains enough aspects of the original system to make it useful to the modeller” [Eykhoff, 1974]. In this context the system is defined as a part of reality (isolated from the rest), which consists of entities that are in mutual relationships (processes) and have limited interactions with the reality outside of the system.

A model is a physical or mathematical description of a physical system including the interaction with the outside surrounding environment, which can be used to simulate the effect of changes in the system itself or the effect of changes due to conditions imposed on the system.

The selection of the appropriate model, together with the associated parameters, is an important element in modelling water-related problems [K.W. Chau, 2007].

Nowadays modelling solutions are used intensively in hydroinformatics. There are two main paradigms for modelling the aquatic environment: physically-based modelling and data-driven modelling [Donald K., 2005].

Physically-based distributed modelling uses a description of the physical phenomena that govern the behaviour of water in the system under study. The principles applied are mass conservation and additional laws describing the driving forces.
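As an illustration of the mass-conservation principle, two standard textbook forms are shown below. The first is the storage balance of a lumped (0-D) water body, with storage S, inflow I and outflow O. The second is the continuity equation for 1-D open-channel flow, as used for example in the Saint-Venant equations, with wetted cross-sectional area A, discharge Q, distance along the channel x and lateral inflow per unit length q. These are generic examples, not the equations of any particular modelling system.

```latex
\begin{aligned}
\frac{dS}{dt} &= I(t) - O(t) && \text{(0-D storage balance)} \\
\frac{\partial A}{\partial t} + \frac{\partial Q}{\partial x} &= q && \text{(1-D continuity equation)}
\end{aligned}
```

In both cases the change in stored water equals what flows in minus what flows out. The 1-D form states this balance per unit length of channel.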

The results of modelling depend on the level of knowledge encapsulated within the software package.

Physically-based models (see Section 3.1) are considered deterministic when they provide a unique output for a given input. The main advantage of such an approach is that, once the model has been tested and calibrated, it can be applied to a wide range of input data. One disadvantage is that these models can generate large amounts of information, since they require small computational steps in both space and time.

Various modelling software tools (see Section 3.1, Available software tools) have led to improvements in the understanding of large-scale water-based systems, such as rivers or coastal waters. Many of these tools have been extended to include additional processes, such as the advection and dispersion of pollutants in the flow or the transport of suspended sediment.
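The advection and dispersion extension can be made concrete with the 1-D advection-dispersion equation ∂C/∂t + u ∂C/∂x = D ∂²C/∂x². Here C is the pollutant concentration, u the flow velocity and D the dispersion coefficient. The sketch below solves it for a river reach with a simple explicit finite-difference scheme, using upwind differences for advection and central differences for dispersion. The velocity, dispersion coefficient, grid and initial spill are hypothetical values. Real modelling systems typically use more robust, often implicit, schemes. The restriction on the time step also hints at why such models need small steps in space and time.

```python
from typing import List


def advect_disperse(c: List[float], u: float, d: float, dx: float, dt: float,
                    steps: int) -> List[float]:
    """1-D advection-dispersion, explicit scheme: upwind advection (u >= 0),
    central-difference dispersion, zero-gradient boundaries."""
    if u < 0 or d < 0:
        raise ValueError("this sketch assumes u >= 0 and d >= 0")
    courant = u * dt / dx          # Courant number
    diffusion = d * dt / dx ** 2   # dimensionless dispersion number
    # This condition keeps all update weights non-negative, which makes the scheme stable.
    if courant + 2 * diffusion > 1:
        raise ValueError("time step too large for this explicit scheme")
    c = list(c)
    for _ in range(steps):
        new = c[:]
        for i in range(1, len(c) - 1):
            advection = -courant * (c[i] - c[i - 1])
            dispersion = diffusion * (c[i + 1] - 2 * c[i] + c[i - 1])
            new[i] = c[i] + advection + dispersion
        new[0], new[-1] = new[1], new[-2]  # zero-gradient boundaries
        c = new
    return c


# Hypothetical reach: 50 cells of 100 m, u = 0.5 m/s, D = 5 m^2/s, dt = 60 s.
spill = [0.0] * 50
spill[5] = 100.0  # initial concentration spike in one cell (mg/L)
result = advect_disperse(spill, u=0.5, d=5.0, dx=100.0, dt=60.0, steps=100)
peak = max(range(len(result)), key=result.__getitem__)
print(peak, round(max(result), 2))
```

After 100 steps (6000 s) the centre of the plume has moved about 0.5 × 6000 = 3000 m, roughly 30 cells downstream. Over the same period its peak has been lowered and spread out by dispersion.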

The second modelling approach is data-driven modelling, whose main principle is to connect a set of output data with the corresponding set of input data. Such a model can only work if enough observed (measured) data are available. The model is based on finding correlations between data sets in order to determine the best input-output relationship.
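A minimal data-driven model is a linear input-output relation fitted to observed pairs by ordinary least squares. The sketch below fits runoff as a linear function of rainfall. The data are synthetic placeholders, not measurements. A linear form is only the simplest possible choice; the techniques of Section 3.2 can capture non-linear relations.

```python
from typing import List, Tuple


def fit_linear(xs: List[float], ys: List[float]) -> Tuple[float, float]:
    """Ordinary least squares for y = a + b * x; returns (a, b)."""
    n = len(xs)
    if n < 2 or n != len(ys):
        raise ValueError("need at least two paired observations")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx              # slope from the covariance of inputs and outputs
    a = mean_y - b * mean_x    # intercept: the line passes through the means
    return a, b


# Synthetic placeholder observations: daily rainfall (mm) -> runoff (mm).
rain = [0.0, 5.0, 10.0, 20.0, 30.0]
runoff = [0.5, 2.0, 3.5, 6.5, 9.5]
a, b = fit_linear(rain, runoff)
print(f"runoff ~ {a:.2f} + {b:.2f} * rainfall")
```

The model contains no physics. It only encodes the relationship present in the observed pairs, which is why it depends on having enough representative data.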

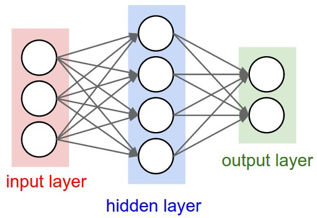

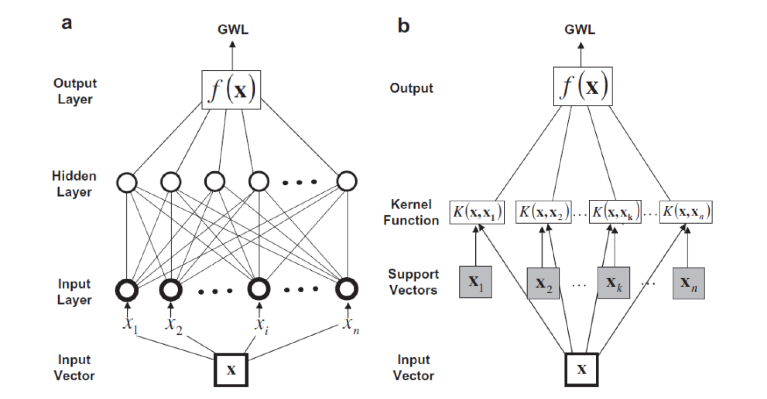

There are several data-driven modelling techniques (see Section 3.2), such as neural networks, nearest-neighbour models, genetic algorithms, fuzzy rule-based systems, decision/model trees, and support vector machines.
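As one example from this list, a nearest-neighbour model predicts the output for a new input by averaging the outputs of the k most similar past observations. The sketch below applies Euclidean distance to input vectors (for instance, rainfall over the previous days) and uses an unweighted average. The choice of k, the distance measure and the sample records are assumptions for illustration.

```python
import math
from typing import List, Sequence, Tuple

Sample = Tuple[Sequence[float], float]  # (input vector, observed output)


def knn_predict(history: List[Sample], query: Sequence[float], k: int = 3) -> float:
    """Average the outputs of the k past inputs closest to `query`."""
    if not 0 < k <= len(history):
        raise ValueError("k must be between 1 and the number of samples")
    ranked = sorted(history, key=lambda s: math.dist(s[0], query))
    return sum(out for _, out in ranked[:k]) / k


# Hypothetical records: (rainfall yesterday, rainfall today) in mm -> river level (cm).
history = [
    ((0.0, 0.0), 50.0),
    ((5.0, 2.0), 55.0),
    ((10.0, 8.0), 70.0),
    ((12.0, 10.0), 75.0),
    ((30.0, 25.0), 120.0),
]
print(knn_predict(history, (11.0, 9.0), k=2))  # 72.5
```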

3.1 Physically-based modelling

As briefly mentioned in the introduction, physically-based models solve, by numerical approximation in space and/or time, the differential equations that represent the physical processes of water motion. Examples of processes to which such approaches are applied include water quantity and quality, flood routing, rainfall-runoff and groundwater flow.

A common distinction between different kinds of physically-based models is the number of spatial dimensions used for the mathematical representation of the modelled physical processes. Accordingly, models can be: zero-dimensional (0-D, or lumped conceptual), where all spatial dimensions are ignored and only temporal variations are considered (water system elements are treated as lumped units, without spatial representation); one-dimensional (1-D), used for example in river system modelling, where the river is considered as a 1-D spatial element; two-dimensional (2-D), used in flood analysis or in the analysis of groundwater systems; and three-dimensional (3-D), used for detailed analysis of lake systems or of three-dimensional flow around hydraulic structures.
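A zero-dimensional (lumped conceptual) model can be as simple as a single linear reservoir. In such a model, catchment storage S obeys the balance dS/dt = P(t) - Q(t), with outflow Q = S/k. The sketch below integrates this balance with an explicit Euler step. The recession constant k, the time step and the rainfall series are hypothetical. The model has no random components, so the same input always gives the same output, which makes it deterministic in the sense used above.

```python
from typing import List


def linear_reservoir(rain: List[float], k: float, dt: float = 1.0,
                     s0: float = 0.0) -> List[float]:
    """Explicit Euler integration of dS/dt = P - S/k; returns outflow Q per step."""
    if not 0 < dt <= k:
        raise ValueError("explicit Euler needs 0 < dt <= k to stay stable and non-negative")
    storage = s0
    outflow = []
    for p in rain:
        q = storage / k            # outflow proportional to storage
        storage += (p - q) * dt    # mass balance: change in storage = inflow - outflow
        outflow.append(q)
    return outflow


# Hypothetical storm: 10 mm/h for 3 hours, then dry weather; k = 5 h.
hydrograph = linear_reservoir([10.0, 10.0, 10.0, 0.0, 0.0, 0.0, 0.0], k=5.0)
print([round(q, 2) for q in hydrograph])
```

The output rises while rain falls and then recedes exponentially. The single parameter k stands in for all the spatial detail that a 1-D or 2-D model would represent explicitly.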

These different modelling approaches are commonly associated with certain application areas. Hydrological models, for example, frequently use 0-D and simplified versions of 1-D approaches. Similarly, water allocation models also mainly use 0-D approaches. Models of urban water systems, such as water distribution and drainage networks, commonly use 1-D approaches, while hydraulic models of rivers and floodplains combine 1-D and 2-D approaches. Detailed analysis of deep lakes is carried out with 3-D models. Water quality modelling can be associated with any of these models; however, the complexity of representing water quality parameters and their interactions differs between 0-D, 1-D, 2-D and 3-D models.

The mathematical representation of the physical processes also depends on the type of flow being considered, such as pressurized, free-surface or groundwater flow.

Nowadays there are many physically-based modelling systems available. These are software packages that incorporate generic algorithms for solving particular mathematical equations, applicable to a given application domain. By introducing data for a specific case, such as boundary conditions, parameters and geometry, the modelling system can be used to instantiate a model for a particular situation (e.g. a river, catchment or aquifer system), depending on the application. The availability of modelling systems has introduced different business models for the delivery of water modelling software products and services, and these business models are continuously evolving. Current trends are towards deploying instantiated models, and even modelling tools, on the Internet, which will increase their accessibility and usage for different water management tasks.
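The distinction between a generic modelling system and an instantiated model can be sketched as follows. The solver is written once; here it computes uniform-flow discharge from Manning's equation, Q = (1/n) A R^(2/3) S^(1/2) in SI units, for a rectangular channel. In this equation A is the flow area, R the hydraulic radius, S the bed slope and n the roughness coefficient. Each model instance then supplies its own geometry and parameters. Manning's equation is used only as a compact stand-in for the far more complex solvers of real packages, and the channel data are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RectangularReach:
    """Case-specific data used to instantiate a model (hypothetical values)."""
    name: str
    width_m: float
    slope: float       # bed slope (m/m)
    manning_n: float   # roughness coefficient (s/m^(1/3))


def manning_discharge(reach: RectangularReach, depth_m: float) -> float:
    """Generic algorithm: uniform-flow discharge (m^3/s) at a given depth, SI units."""
    area = reach.width_m * depth_m
    wetted_perimeter = reach.width_m + 2 * depth_m
    hydraulic_radius = area / wetted_perimeter
    return area * hydraulic_radius ** (2 / 3) * reach.slope ** 0.5 / reach.manning_n


# The same generic solver, instantiated for two different (hypothetical) reaches.
upland = RectangularReach("upland stream", width_m=5.0, slope=0.01, manning_n=0.040)
lowland = RectangularReach("lowland river", width_m=40.0, slope=0.0002, manning_n=0.030)
for reach in (upland, lowland):
    print(reach.name, round(manning_discharge(reach, depth_m=1.0), 1))
```

Keeping case data in a separate, immutable record mirrors how a modelling system separates its algorithms from the boundary conditions, parameters and geometry that define a particular model.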

Examples of well-known European modelling systems are: the MIKE ZERO modelling suite developed by the Danish Hydraulic Institute (DHI) from Denmark; Delft3D and Sobek released by Deltares, from the Netherlands; and Infoworks by Wallingford Software from the UK. Elsewhere, for example in the USA, many modelling systems are developed and maintained by federal agencies, such as the United States Geological Survey (USGS), the US Army Corps of Engineers (USACE) and the Environmental Protection Agency (EPA).

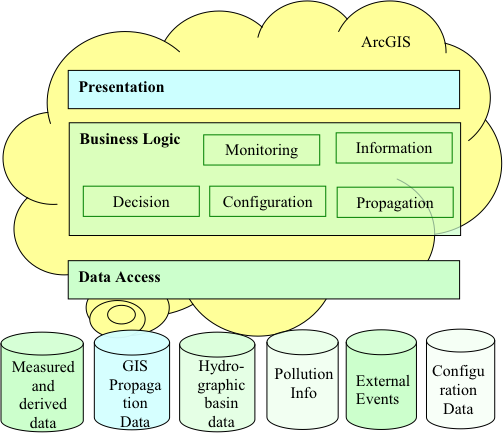

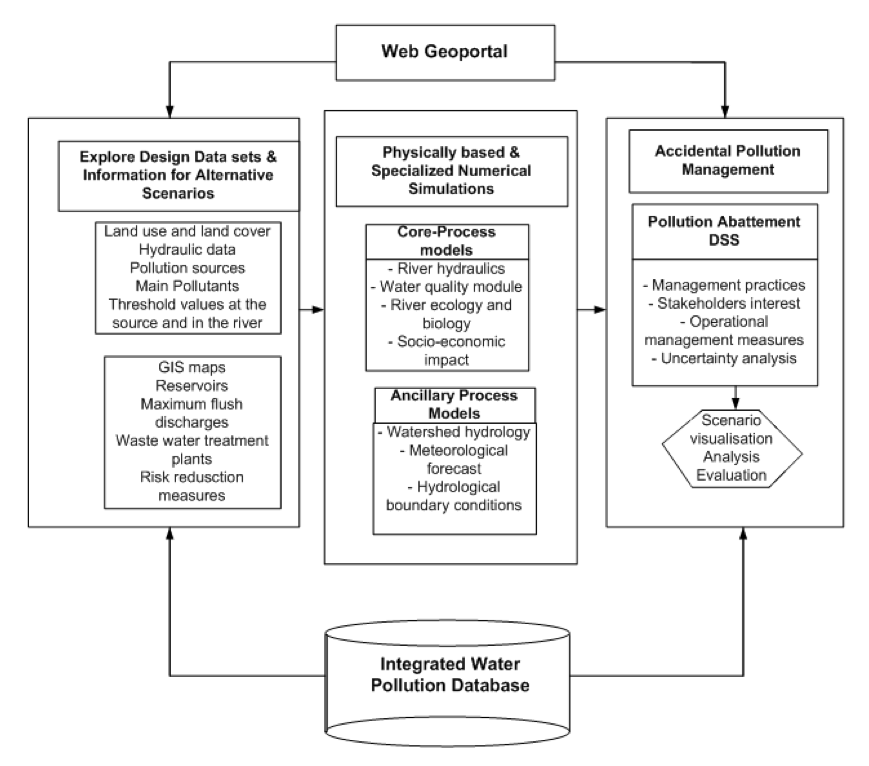

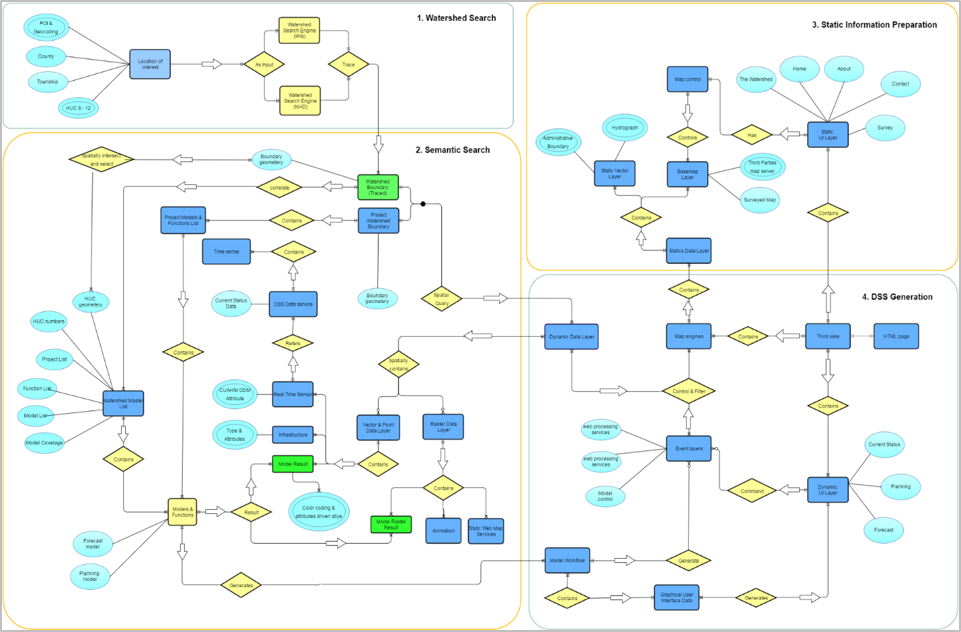

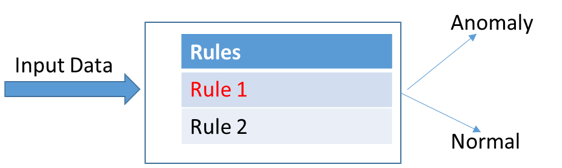

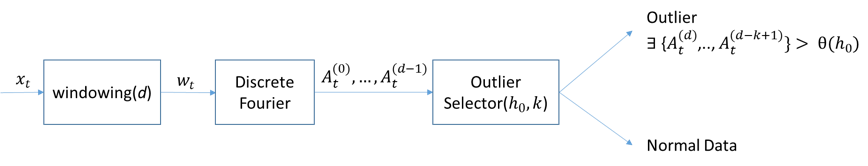

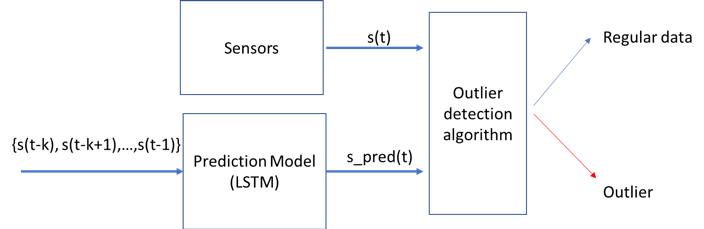

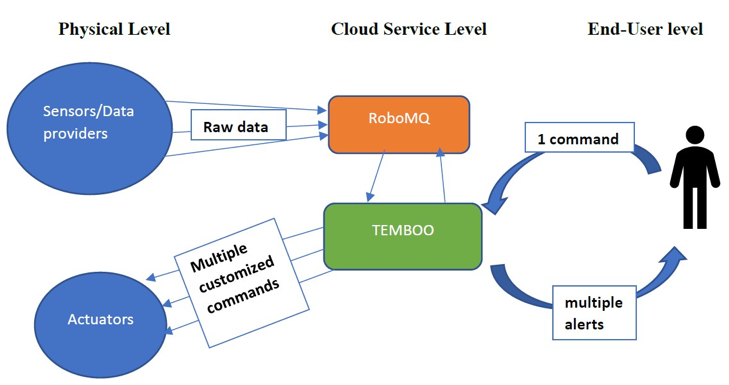

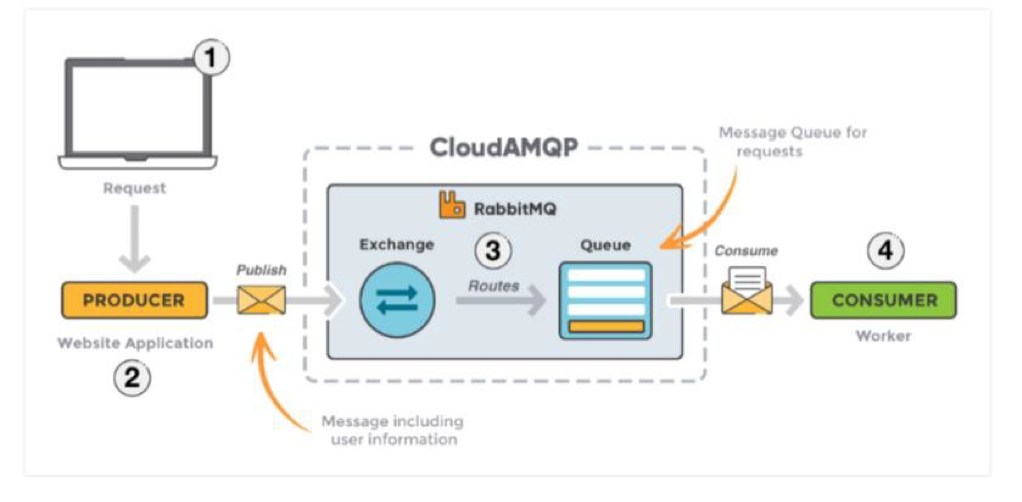

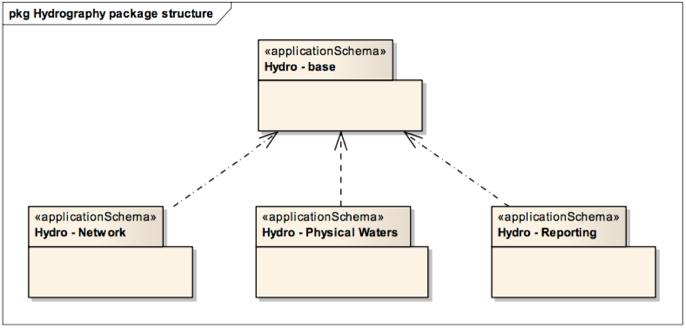

Newly released modelling systems are frequently freely available, but some lack sophisticated user interfaces for pre- and post-processing of data and modelling results. Several private companies develop such components around the freely available modelling systems and offer them as commercial products and services (e.g. Bentley, Aquaveo). In addition, many academic centres, such as universities and research institutes, maintain freely available academic software, which sometimes develops into larger open-source water modelling projects.