D1.1 Technology survey: Prospective and challenges - Revised version (2018)

4 ICT based systems for monitoring, control and decision support

4.3 Technologies for near-real-time measurements, leakage detection and localization

According to the National Oceanic and Atmospheric Administration (NOAA), climate, weather, ecosystem and other environmental data (used by scientists, engineers, resource managers and policy makers) are growing in volume and diversity, which creates substantial data management challenges. During the first phase of the project, we will analyse the specific guidelines that explain and illustrate how NOAA's principles for effective data management can be applied. To support all defined objectives, we will consider the nine main principles presented by NOAA [Herlihy, 2015]:

- Environmental data should be archived and made accessible;

- Data-generating activities should include adequate resources to support end-to-end data management;

- Environmental data management activities should recognize user needs;

- Effective interagency and international partnerships are essential;

- Metadata are essential for data management;

- Data and metadata require expert stewardship;

- A formal, on-going process, with broad community input, is needed to decide what data to archive and what data not to archive;

- An effective data archive should provide for discovery, access, and integration;

- Effective data management requires a formal, on-going planning process.

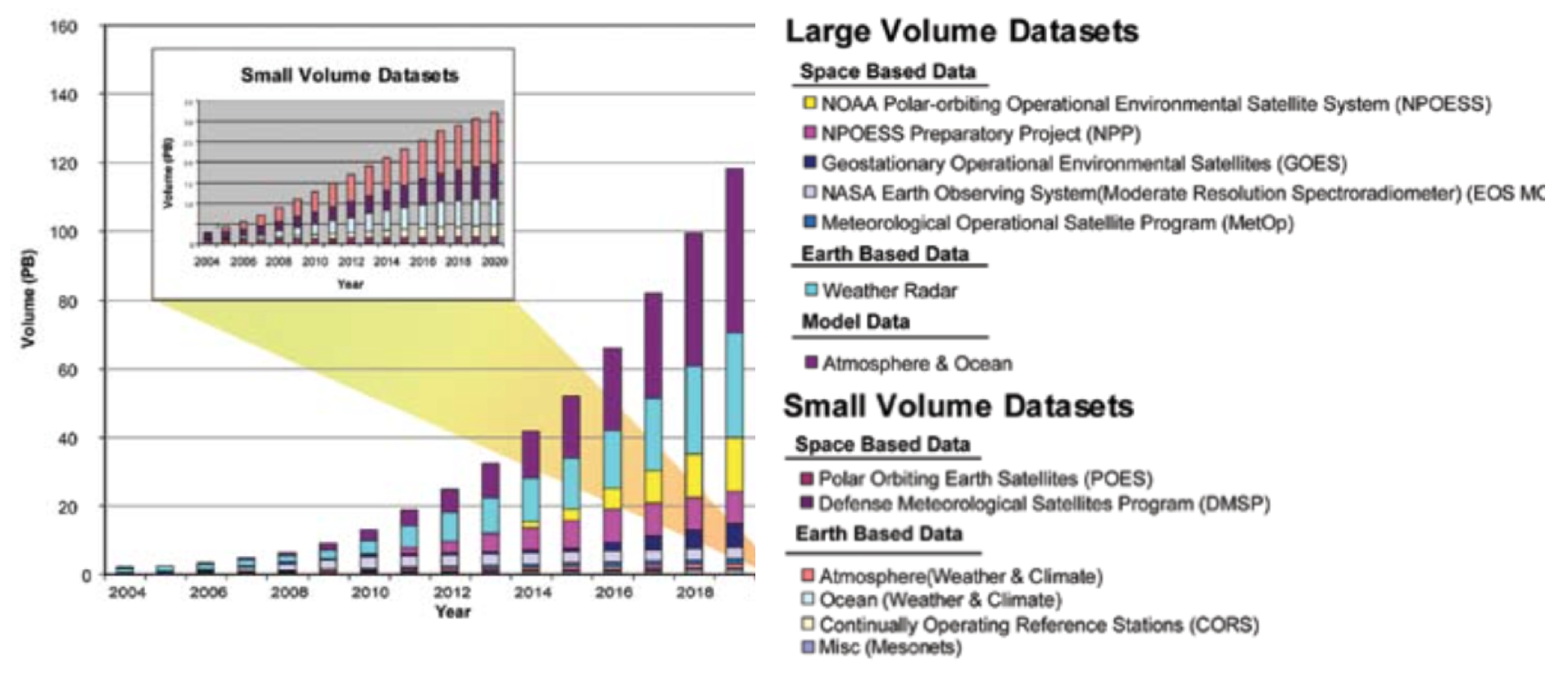

NOAA has projected a sharp increase in the volume of archived environmental data, from 3.5 petabytes in 2007 to nearly 140 petabytes (140 billion megabytes) by 2020 (see Figure 5).

The notion of open data, and specifically open government data (information, public or otherwise, that anyone is free to access and re-use for any purpose), has been around for some years. In 2009 open data started to become visible in the mainstream, with various governments (such as those of the USA, UK, Canada and New Zealand) announcing new initiatives to open up their public information. Open data is data that can be freely used, reused and redistributed by anyone, subject at most to the requirements to attribute and share alike. The full Open Definition gives precise details of what this means; the most important points are summarized below:

- Availability and Access: the data must be available as a whole and at no more than a reasonable reproduction cost, preferably by downloading over the internet. The data must also be available in a convenient and modifiable form.

- Reuse and Redistribution: the data must be provided under terms that permit reuse and redistribution including the intermixing with other datasets.

- Universal Participation: everyone must be able to use, reuse and redistribute the data; there should be no discrimination against fields of endeavour or against persons or groups. For example, ‘non-commercial’ restrictions that would prevent ‘commercial’ use, or restrictions of use for certain purposes (e.g. only in education), are not allowed.

Figure 5. Archived environmental data: past, present and future (SOURCE: NOAA, 2007).
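To give a sense of the scale of this projection, the two endpoints quoted for NOAA's archive (3.5 PB in 2007, about 140 PB in 2020) imply the compound annual growth rate r and doubling time T_2 worked out below. This is a derived illustration that assumes steady exponential growth between the two endpoints; it is not a figure reported by NOAA.

```latex
r = \left(\frac{V_{2020}}{V_{2007}}\right)^{1/(2020-2007)} - 1
  = \left(\frac{140\ \text{PB}}{3.5\ \text{PB}}\right)^{1/13} - 1
  = 40^{1/13} - 1 \approx 0.328,
\qquad
T_{2} = \frac{\ln 2}{\ln(1 + r)} \approx 2.4\ \text{years}.
```

Under that assumption the archive grows by roughly a third every year and doubles about every two and a half years. With decimal SI prefixes, 1 PB = 10^9 MB, which is where the figure of 140 billion megabytes comes from.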

The standardization, implementation and integration of sensor-based monitoring platforms and existing e-services for environmental support represent the main technical challenge in building integrated platforms for data acquisition.
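One concrete aspect of this standardization challenge is that different sensor platforms report the same physical quantity with different field names, units and time formats. The Python sketch below shows one possible approach: every incoming reading is normalized into a single common record that carries its own metadata (sensor id, quantity, SI unit, UTC timestamp). The two vendor payload formats, the `Reading` record and the conversion table are all hypothetical and serve only to illustrate the idea.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Reading:
    """Hypothetical common record: each value carries its own metadata."""
    sensor_id: str
    quantity: str
    value: float
    unit: str            # always an SI unit after normalization
    timestamp: datetime  # always timezone-aware UTC


# Hypothetical conversion table: (quantity, source unit) -> (SI unit, factor, offset),
# applied as si_value = value * factor + offset.
TO_SI = {
    ("pressure", "bar"): ("Pa", 1e5, 0.0),
    ("pressure", "psi"): ("Pa", 6894.757, 0.0),
    ("temperature", "degC"): ("K", 1.0, 273.15),
    ("flow", "L/min"): ("m3/s", 1.0 / 60000.0, 0.0),
}


def to_si(quantity, value, unit):
    """Convert a value to SI; an unsupported (quantity, unit) pair raises KeyError."""
    si_unit, factor, offset = TO_SI[(quantity, unit)]
    return value * factor + offset, si_unit


def from_vendor_a(payload):
    """Hypothetical vendor A: pressure in bar, Unix epoch seconds."""
    value, unit = to_si("pressure", payload["p_bar"], "bar")
    ts = datetime.fromtimestamp(payload["ts"], tz=timezone.utc)
    return Reading(payload["id"], "pressure", value, unit, ts)


def from_vendor_b(payload):
    """Hypothetical vendor B: self-describing JSON with ISO 8601 timestamps."""
    value, unit = to_si(payload["measurement"], payload["val"], payload["unit"])
    ts = datetime.fromisoformat(payload["time"]).astimezone(timezone.utc)
    return Reading(payload["sensor"], payload["measurement"], value, unit, ts)


readings = [
    from_vendor_a({"id": "A-17", "p_bar": 2.5, "ts": 1514764800}),
    from_vendor_b({"sensor": "B-03", "measurement": "temperature", "val": 12.0,
                   "unit": "degC", "time": "2018-01-01T00:00:00+00:00"}),
]
for r in readings:
    print(r)
```

Raising `KeyError` for an unknown unit is a deliberate choice in this sketch. An unsupported source then fails loudly rather than silently mixing units in the shared data store.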

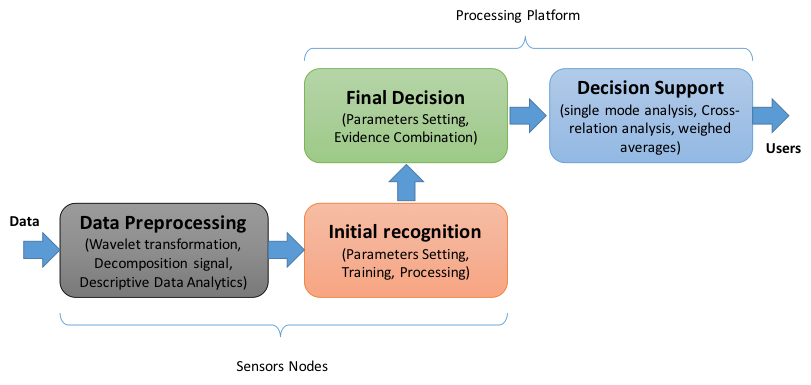

Technologies for near-real-time measurements follow a hierarchical pipeline processing model. A general model for pipeline leak detection and localization was proposed in [Wan, 2011]. The model processes leak information separately at several distinct levels, which improves the accuracy and reliability of the diagnosis results. The complete flow model is presented in Figure 6.

Figure 6. Hierarchical model for near-real-time measurements and processing.
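To make the idea of separate processing levels concrete, the Python sketch below chains three levels: preprocessing (smoothing), initial recognition (flagging samples) and final decision (confirming a leak). It assumes that the only input is a simple flow balance between inlet and outlet meters on one pipeline section. All function names, as well as the threshold, window and persistence values, are hypothetical. This is not the specific model of [Wan, 2011], and the localization level is left out here.

```python
from statistics import mean


def smooth(signal, window=3):
    """Preprocessing level: trailing moving average to suppress sensor noise."""
    return [mean(signal[max(0, i - window + 1): i + 1]) for i in range(len(signal))]


def initial_recognition(inflow, outflow, threshold):
    """Recognition level: flag samples whose inlet/outlet imbalance exceeds a threshold."""
    return [(fin - fout) > threshold for fin, fout in zip(inflow, outflow)]


def final_decision(flags, persistence):
    """Decision level: confirm a leak only after `persistence` consecutive flags.
    Returns the sample index at which the leak is confirmed, or None."""
    run = 0
    for i, flagged in enumerate(flags):
        run = run + 1 if flagged else 0
        if run >= persistence:
            return i
    return None


def detect_leak(inflow, outflow, threshold=0.5, persistence=3, window=3):
    """Run the levels in order; each level only consumes the output of the previous one."""
    fin = smooth(inflow, window)
    fout = smooth(outflow, window)
    flags = initial_recognition(fin, fout, threshold)
    return final_decision(flags, persistence)


inflow = [10.0] * 10               # constant inlet flow (hypothetical units)
outflow = [10.0] * 4 + [9.0] * 6   # outlet drops by 1.0 from sample 4: simulated leak
print(detect_leak(inflow, outflow))  # prints 7
```

Keeping the levels as separate functions means each one can be tuned or replaced independently. For example, a model-based estimator could replace the flow balance without touching the decision logic.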

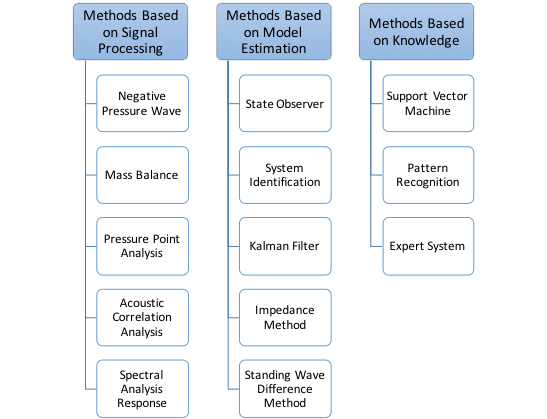

Within this general model, existing pipeline leak detection and localization methods can be divided into three categories: methods based on signal processing, methods based on model estimation, and methods based on knowledge. These methods use different techniques for data processing, initial recognition, final decision and leak localization, as depicted in Figure 7.

Figure 7. Leak detection and localization methods.
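As an illustration of the signal-processing category, one widely used localization technique estimates the difference in arrival time of a leak-induced signal at two sensors that bracket a pipeline section. This technique is named here only as an example and is not necessarily one of the methods shown in Figure 7. Suppose the sensors are L apart and the signal travels at speed v. The arrival times are then t_A = t_0 + x/v and t_B = t_0 + (L - x)/v, so Δt = t_A - t_B = (2x - L)/v, and the leak lies at x = (L + vΔt)/2 from sensor A. The sketch below estimates Δt from the peak of the cross-correlation. It assumes synchronized sampling, a known constant wave speed and a single dominant leak signal; all numeric values are synthetic and hypothetical.

```python
import numpy as np


def estimate_delay(sig_a, sig_b, fs):
    """Estimate t_A - t_B in seconds (how much later the signal reaches sensor A
    than sensor B) from the peak of the full cross-correlation."""
    corr = np.correlate(sig_a, sig_b, mode="full")
    lag = int(np.argmax(corr)) - (len(sig_b) - 1)  # lag in samples
    return lag / fs


def locate_leak(sig_a, sig_b, fs, length, wave_speed):
    """Distance (m) of the leak from sensor A, using x = (L + v * (t_A - t_B)) / 2."""
    dt = estimate_delay(sig_a, sig_b, fs)
    x = (length + wave_speed * dt) / 2.0
    return min(max(x, 0.0), length)  # clamp to the monitored section


# Synthetic case (hypothetical values): 1000 m section, 1000 m/s wave speed,
# 1 kHz sampling, leak 300 m from sensor A, so the same burst reaches
# A after 0.3 s and B after 0.7 s, on top of low-level sensor noise.
fs, length, wave_speed = 1000.0, 1000.0, 1000.0
rng = np.random.default_rng(42)
burst = rng.standard_normal(200)
sig_a = 0.1 * rng.standard_normal(2000)
sig_b = 0.1 * rng.standard_normal(2000)
sig_a[300:500] += burst
sig_b[700:900] += burst

x = locate_leak(sig_a, sig_b, fs, length, wave_speed)
print(f"estimated leak position: {x:.1f} m from sensor A")
```

The achievable resolution is limited by the sampling interval: one sample of lag corresponds to v / (2 fs) = 0.5 m in this synthetic setup.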

For example, In-Situ® Products offers a real-time groundwater monitoring solution with the following capabilities:

- Measure and log all required parameters;

- Monitor performance indicators in real time;

- Monitor the radius of influence in real time with the Virtual HERMIT Kit and a vented cable extender.